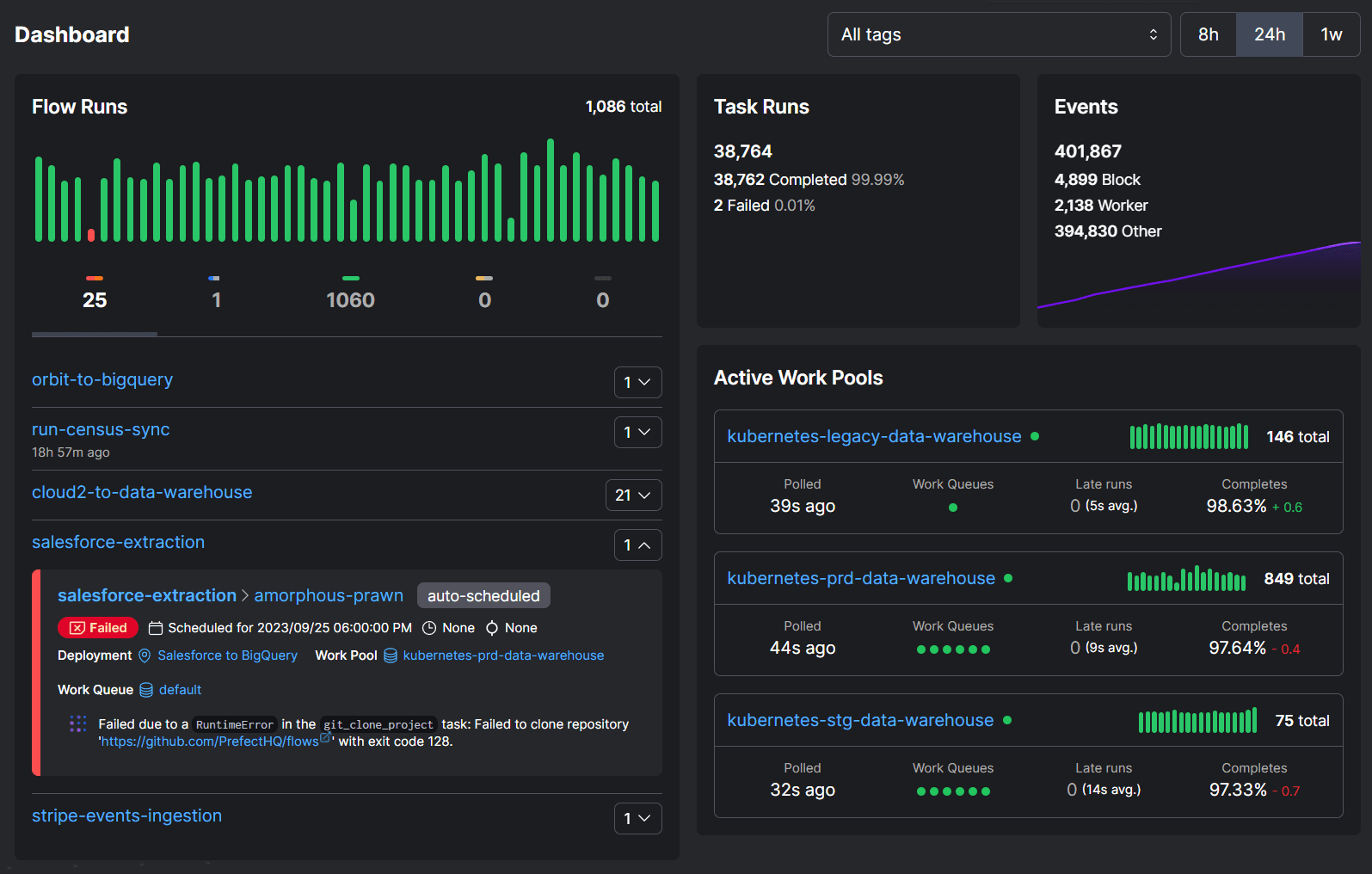

Snowflake and Prefect: Architecture Built for Production

The fastest and most secure way to deploy workflows to production while retaining full visibility into data quality.

Standardize Snowflake Usage

Orchestrate every load into Snowflake from Prefect to keep your data pipelines consistent and traceable. Whether you're running dbt or an event-driven workflow, Prefect makes every task dependency explicit.
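To make "explicit task dependencies" concrete, here is a minimal standard-library sketch. It is not Prefect's or dbt's implementation. It only illustrates the idea that once each step declares what it depends on, a valid run order can be derived and checked. The model names (`stg_customers`, `orders`, and so on) and the helper `execution_order` are assumptions chosen for illustration.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical dbt-style models: each key maps to the set of models it
# depends on. These names are illustrative assumptions, not taken from
# any real project configuration.
MODEL_DEPENDENCIES = {
    "stg_customers": set(),
    "stg_orders": set(),
    "stg_payments": set(),
    "customers": {"stg_customers", "stg_orders", "stg_payments"},
    "orders": {"stg_orders", "stg_payments"},
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every model comes after its upstream models.

    Raises graphlib.CycleError if the declared dependencies form a cycle.
    """
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for position, model in enumerate(execution_order(MODEL_DEPENDENCIES), start=1):
        print(f"{position}. {model}")
```

Because the dependencies are declared as data, a circular reference fails immediately with `CycleError` rather than surfacing partway through a run.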

```python
from prefect_dbt_flow import dbt_flow
from prefect_dbt_flow.dbt import DbtProfile, DbtProject

my_flow = dbt_flow(
    project=DbtProject(
        name="jaffle_shop",
        project_dir="jaffle_shop/",
        profiles_dir="jaffle_shop/",
    ),
    profile=DbtProfile(
        target="dev",
    ),
)

if __name__ == "__main__":
    my_flow()
```

Supporting Business Critical Data at Crumbl

Chandler Soto, Data Engineer at Crumbl, a $1B business, supports several of the company's forecasting models. The team runs this business-critical function with confidence because Prefect gives them visibility into their Snowflake data.

More Reading

Read about how you can scale your scheduled and event-driven critical data pipelines with Prefect and Snowflake.

Our latest release: interactive workflows, incident management, and custom workflow metric alerts. Monitor any type of work with Prefect.

Wondering why versatility is important? The pipeline mental model for data has constrained us. Prefect gives you the flexibility to respond to data.

Downsides emerge with Airflow at scale: handling different scheduling and trigger use cases, onboarding new team members, and staying lean on infrastructure cost. Prefect addresses these complexities in a first-class way.