Real-Time Workflows with Debezium and Prefect

The move from batch schedules to real-time, event-driven systems is transforming how organizations work with data. While cron jobs and scheduled processes still have their place, modern businesses increasingly demand instant insights and immediate action.

Unfortunately, legacy systems that were built in an era of web front-ends, middle tiers, and monolithic databases often lack native mechanisms for triggering remote processes. Rewriting them for modern architectures can be expensive, risky, and yield little return on investment.

So how can you modernize without rewriting? Enter Change Data Capture (CDC).

CDC: Modernizing Without Touching Legacy Code

Change Data Capture continuously monitors your database’s transaction logs, emitting change events without modifying your application code. Your legacy app keeps running as-is, while downstream systems can react instantly to new or updated data.

Debezium: Real-Time CDC for Popular Databases

Debezium is an open-source, distributed platform that streams database changes in real time. It supports PostgreSQL, MySQL, and several other databases, which makes it a natural bridge from older systems to modern event pipelines.

Debezium reads transaction logs, converts them into event streams, and delivers them to sinks like Kafka, Pulsar, or even plain HTTP endpoints. No invasive schema changes, no rewrites.

Adding Prefect to Orchestrate Real-Time Workflows

Debezium supports CloudEvents, a standardized way of describing event data. By pairing this with Prefect’s webhooks, you can trigger automated workflows the instant a change occurs.
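CloudEvents 1.0 requires every event to carry four context attributes: specversion, id, source, and type. The minimal sketch below illustrates what a receiver can rely on by reporting which of those required attributes are missing from a structured-mode event. The helper name is hypothetical, and the sample values are copied from the Debezium event shown later in this post.

```python
from typing import Any

# Context attributes that the CloudEvents 1.0 specification marks as REQUIRED.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def missing_cloudevent_attributes(event: dict[str, Any]) -> list[str]:
    """Return the required attributes that are absent or empty, in spec order."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


if __name__ == "__main__":
    # Sample modeled on the Debezium event for the customers table.
    sample = {
        "specversion": "1.0",
        "id": 'name:app;lsn:26595192;txId:765;sequence:[null,"26595192"]',
        "source": "/debezium/postgresql/app",
        "type": "io.debezium.connector.postgresql.DataChangeEvent",
        "datacontenttype": "application/json",
        "data": {"before": None, "after": {"id": 1}},
    }
    print(missing_cloudevent_attributes(sample))  # []
    print(missing_cloudevent_attributes({"specversion": "1.0"}))  # ['id', 'source', 'type']
```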

Here’s the high-level flow:

- Debezium detects a database change.

- Debezium HTTP Sink sends a CloudEvent to a Prefect webhook.

- Prefect Automation instantly triggers the relevant workflow.

This setup turns your database into a live event source without modifying application logic.

Local Setup: PostgreSQL + Debezium + Prefect

To demonstrate this integration, we’ll create a simple local environment using Docker Compose. It simulates a real-world scenario: a legacy PostgreSQL database with a customers table whose data changes you want to capture in real time with Debezium and feed directly into Prefect workflows via webhooks.

```yaml
services:
  postgres:
    image: postgres:17
    environment:
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres
      POSTGRES_DB: app
    command: ["postgres", "-c", "wal_level=logical"]
    ports:
      - "5432:5432"
    volumes:
      - ./init.sql:/docker-entrypoint-initdb.d/init.sql

  debezium-server:
    image: quay.io/debezium/server:3.2
    volumes:
      - ./debezium/config:/debezium/config
    depends_on:
      - postgres
```

Here, PostgreSQL is configured with logical replication enabled via wal_level=logical, which is a prerequisite for CDC. The mounted init.sql script sets up the demo schema and the replication role automatically when the container first initializes its data directory.

Database Initialization

The init.sql script handles a few requirements for the local CDC test environment:

- It defines a customers table to serve as our example data source.

- It configures Postgres to provide full row images for update and delete events, and grants a replication role (replicator) the minimal privileges Debezium needs to read the database’s logical replication stream.

- It sets up a publication that only includes INSERT events for the customers table, helping us focus on a specific event type.

```sql
-- create the application table
CREATE TABLE public.customers (
    id SERIAL PRIMARY KEY,
    first_name TEXT NOT NULL,
    last_name TEXT NOT NULL,
    email TEXT UNIQUE NOT NULL
);

-- ensure full row images for updates and deletes
ALTER TABLE public.customers REPLICA IDENTITY FULL;

-- create a replication role for Debezium
CREATE ROLE replicator WITH REPLICATION LOGIN PASSWORD 'replicator';
GRANT CONNECT ON DATABASE app TO replicator;
GRANT USAGE ON SCHEMA public TO replicator;
GRANT SELECT ON public.customers TO replicator;

-- publish only inserts to the logical replication stream
CREATE PUBLICATION dbz_demo_events FOR TABLE public.customers WITH (publish = 'insert');
```

By limiting the publication to INSERT events, we avoid unnecessary noise in our event stream. This setup mimics how you might selectively capture changes from a busy production database without overloading downstream systems.
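The publication filters events inside the database, so a downstream flow normally sees only inserts. If the publication is widened later, a flow can still guard itself by inferring the operation from the before and after row images that Debezium includes in each change event. The following is a minimal sketch: change_kind and should_process are hypothetical helpers, and the inference assumes REPLICA IDENTITY FULL. With the default identity, ordinary updates arrive with before set to null and would look like inserts. Snapshot reads of pre-existing rows also lack a before image, so they are classified as inserts too.

```python
from typing import Any, Optional

# A row image is either a column-name -> value mapping or None (JSON null).
Row = Optional[dict[str, Any]]


def change_kind(before: Row, after: Row) -> str:
    """Infer the row operation from the before/after images of a change event.

    Assumes REPLICA IDENTITY FULL on the source table (see init.sql).
    """
    if before is None and after is not None:
        return "insert"  # also the shape of snapshot reads of existing rows
    if before is not None and after is not None:
        return "update"
    if before is not None:
        return "delete"
    raise ValueError("change event has neither a before nor an after image")


def should_process(data: dict) -> bool:
    """Consumer-side guard: act only on inserts, whatever the publication sends."""
    return change_kind(data.get("before"), data.get("after")) == "insert"
```

Combined with the database-side publication filter, this gives two independent layers that keep non-insert changes from triggering work.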

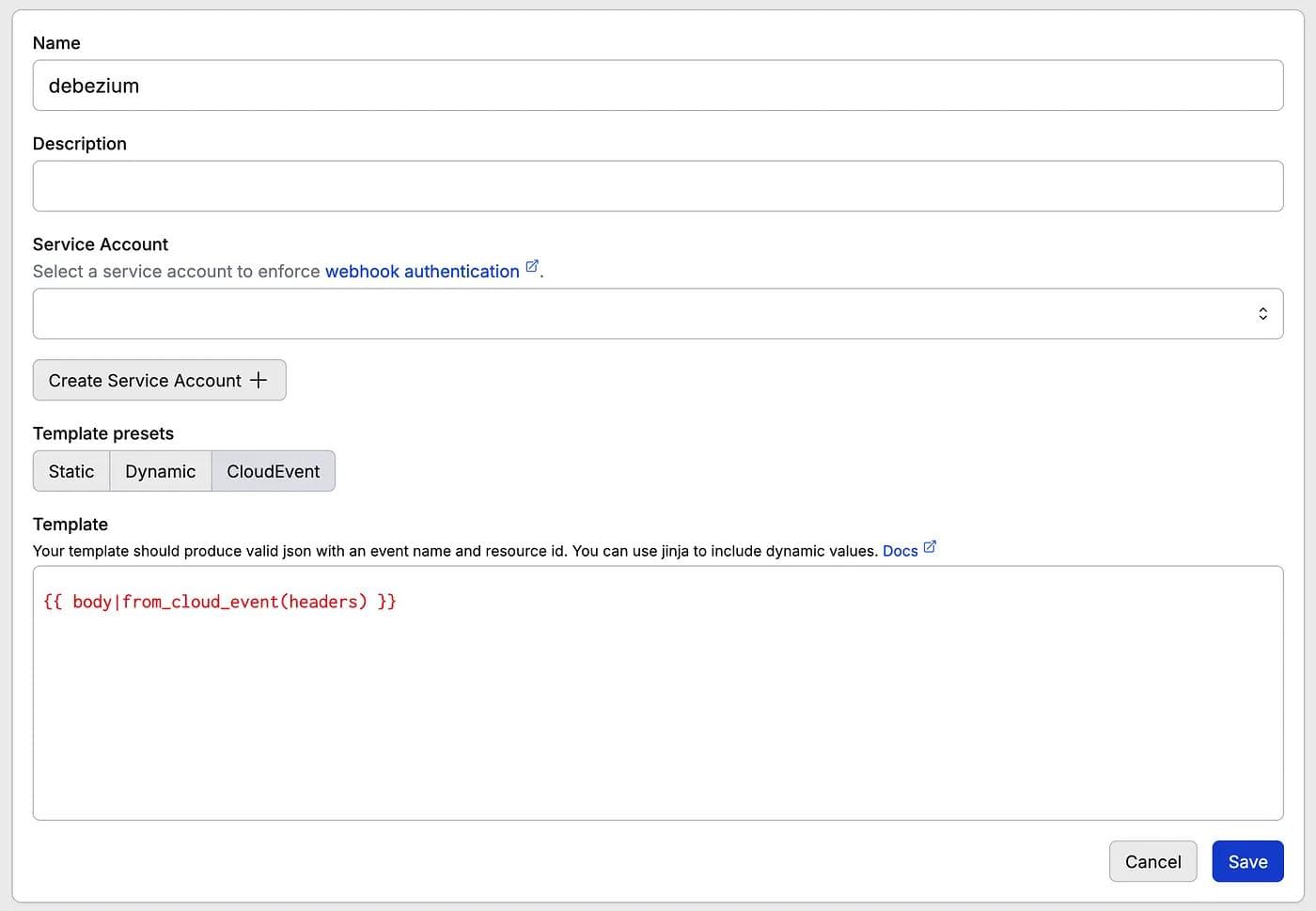

Create a Prefect Webhook

To receive and process events emitted by Debezium, you’ll need to configure a webhook in Prefect Cloud.

Note: Webhooks are available exclusively on Prefect’s paid subscription plans.


Using the {{ body | from_cloud_events(headers) }} CloudEvent template tells Prefect to parse the entire CloudEvent payload into a structured JSON event, ready for use by the Prefect automation we set up later.
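Before Debezium is wired up, you can exercise the webhook by POSTing a hand-built CloudEvent to it. Here is a minimal standard-library sketch. It assumes the webhook accepts structured-mode CloudEvents (a JSON body sent with Content-Type application/cloudevents+json). The helper name post_cloudevent and all values in TEST_EVENT are hypothetical, including the deliberately non-Debezium event type, which keeps the test from matching automations that expect DataChangeEvent.

```python
import json
import urllib.request


def post_cloudevent(url: str, event: dict, timeout: float = 10.0) -> int:
    """POST one structured-mode CloudEvent as JSON and return the HTTP status.

    urlopen() raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    request = urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        method="POST",
        # Structured mode (assumed to be accepted by the webhook): attributes
        # and data travel together in a single JSON body.
        headers={"Content-Type": "application/cloudevents+json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Hypothetical test event; the type is chosen so it does not look like Debezium.
TEST_EVENT = {
    "specversion": "1.0",
    "id": "manual-test-1",
    "source": "/manual/test",
    "type": "com.example.webhook.test",
    "datacontenttype": "application/json",
    "data": {"before": None, "after": {"id": 0, "email": "test@example.com"}},
}
```

Calling post_cloudevent("https://api.prefect.cloud/hooks/<hash>", TEST_EVENT) with your webhook’s URL should make the event appear on the Events page, where you can confirm that the template parsed it.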

Debezium Configuration

Our Debezium configuration connects the PostgreSQL source to the Prefect webhook destination. With the HTTP sink type, Debezium forwards each change event directly to Prefect, so no message broker is needed between the two.

```properties
# == Sink (HTTP) ==
debezium.sink.type=http
debezium.sink.http.url=https://api.prefect.cloud/hooks/<hash>

# == Source (PostgreSQL) ==
debezium.source.connector.class=io.debezium.connector.postgresql.PostgresConnector
debezium.source.database.hostname=postgres
debezium.source.database.port=5432
debezium.source.database.user=replicator
debezium.source.database.password=replicator
debezium.source.database.dbname=app
# logical name; appears in event ids and schema names such as app.public.customers
debezium.source.topic.prefix=app
debezium.source.plugin.name=pgoutput
# must match the CREATE PUBLICATION statement in init.sql
debezium.source.publication.name=dbz_demo_events
debezium.source.publication.autocreate.mode=disabled

# == Format ==
debezium.format.value=cloudevents
```

The key detail here is debezium.format.value=cloudevents, which ensures all events are wrapped in the CloudEvents standard format. This makes them easy for Prefect to interpret and reliably process, complete with metadata describing the nature and context of each change event.
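That metadata also helps with reliability. Debezium provides at-least-once delivery, so after a restart the same change may reach the webhook more than once. The CloudEvents specification requires source plus id to be unique per distinct event and allows consumers to treat repeats as duplicates. In the example event later in this post, the id appears to be derived from the connector name, LSN, and transaction id, so a re-sent change should carry the same id. The following is a minimal in-memory sketch of deduplication on that key. The class name is hypothetical, and a real deployment would need shared, persistent storage.

```python
from collections import OrderedDict


class CloudEventDeduplicator:
    """Flag CloudEvents whose (source, id) pair was seen recently.

    In-memory only: state is lost on restart and not shared between processes.
    """

    def __init__(self, max_entries: int = 10_000) -> None:
        self._seen = OrderedDict()  # (source, id) -> None, least recent first
        self._max_entries = max_entries

    def is_duplicate(self, event: dict) -> bool:
        key = (event["source"], event["id"])
        if key in self._seen:
            self._seen.move_to_end(key)  # refresh so frequent repeats stay cached
            return True
        self._seen[key] = None
        if len(self._seen) > self._max_entries:
            self._seen.popitem(last=False)  # evict the least recently seen key
        return False
```

A flow would call is_duplicate(event) before doing any non-idempotent work, such as sending an email, and skip the event when it returns True.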

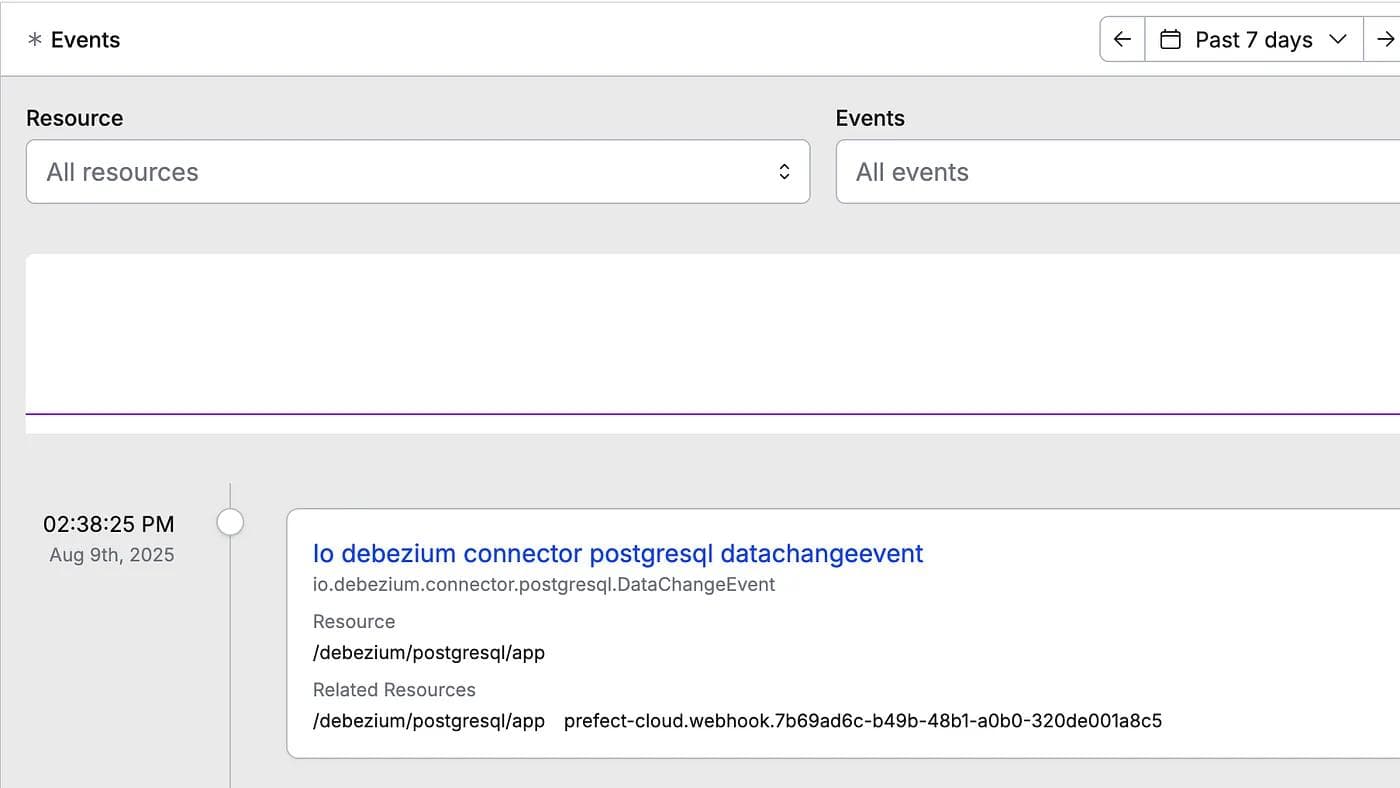

Events in Prefect

With our Debezium configuration in place, we can now examine exactly what Prefect receives when a database change is forwarded.

For example, inserting a row:

```sql
INSERT INTO public.customers (first_name, last_name, email) VALUES ('Brendan', 'Dalpe', 'brendan.dalpe@prefect.io');
```

will produce an event with the type io.debezium.connector.postgresql.DataChangeEvent. We can see this on the Events page in the Prefect UI.

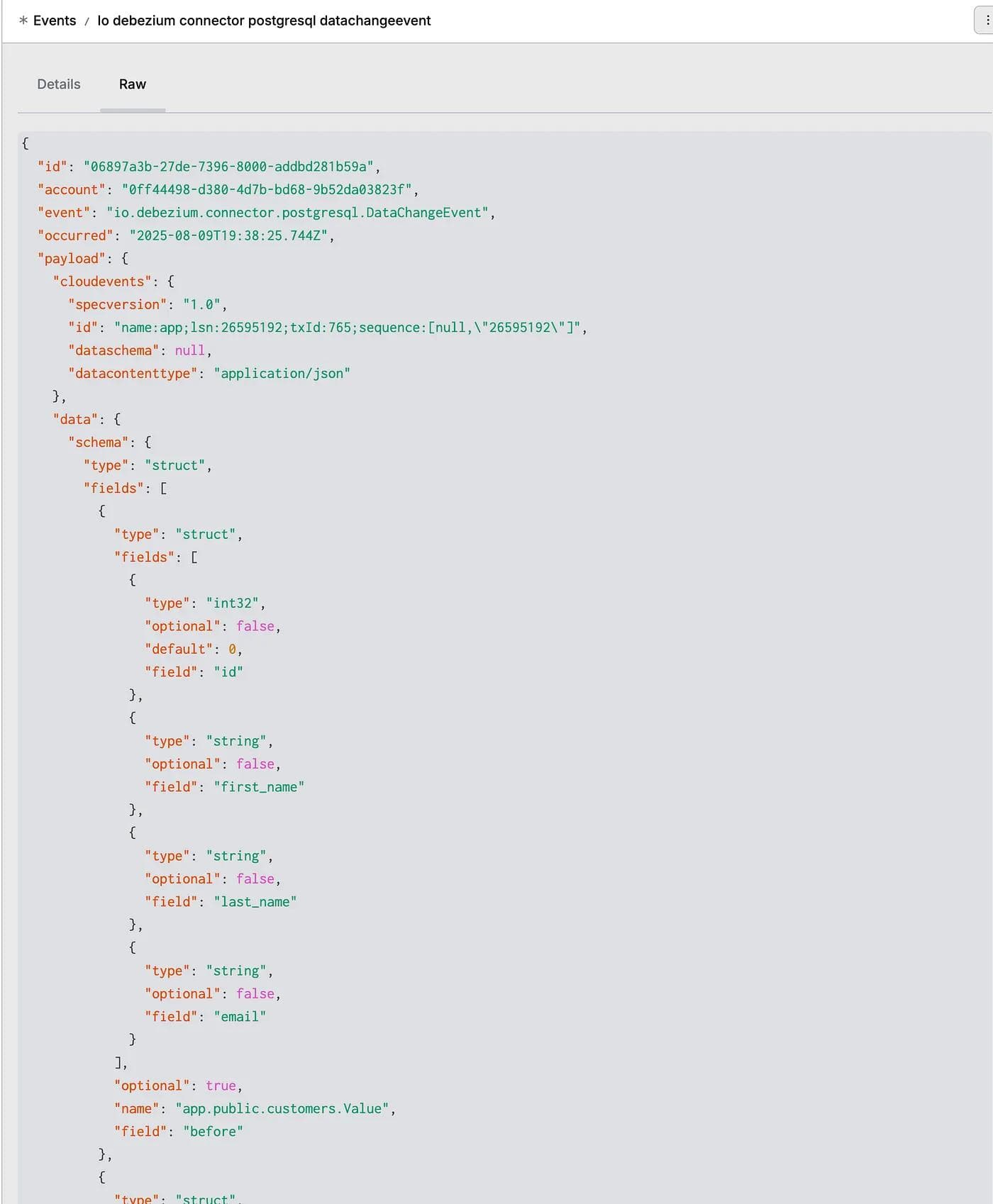

Inside the Raw tab in Prefect’s event inspector, the incoming CloudEvent is fully parsed into JSON, making it easy to inspect the entire payload and confirm the change details.

Here’s an example of the full CloudEvent payload received and parsed by Prefect:

```json
{
  "id": "06897a3b-27de-7396-8000-addbd281b59a",
  "account": "0ff44498-d380-4d7b-bd68-9b52da03823f",
  "event": "io.debezium.connector.postgresql.DataChangeEvent",
  "occurred": "2025-08-09T19:38:25.744Z",
  "payload": {
    "cloudevents": {
      "specversion": "1.0",
      "id": "name:app;lsn:26595192;txId:765;sequence:[null,\"26595192\"]",
      "dataschema": null,
      "datacontenttype": "application/json"
    },
    "data": {
      "schema": {
        "type": "struct",
        "fields": [
          {
            "type": "struct",
            "fields": [
              { "type": "int32", "optional": false, "default": 0, "field": "id" },
              { "type": "string", "optional": false, "field": "first_name" },
              { "type": "string", "optional": false, "field": "last_name" },
              { "type": "string", "optional": false, "field": "email" }
            ],
            "optional": true,
            "name": "app.public.customers.Value",
            "field": "before"
          },
          {
            "type": "struct",
            "fields": [
              { "type": "int32", "optional": false, "default": 0, "field": "id" },
              { "type": "string", "optional": false, "field": "first_name" },
              { "type": "string", "optional": false, "field": "last_name" },
              { "type": "string", "optional": false, "field": "email" }
            ],
            "optional": true,
            "name": "app.public.customers.Value",
            "field": "after"
          }
        ],
        "optional": false,
        "name": "io.debezium.connector.postgresql.Data"
      },
      "payload": {
        "before": null,
        "after": {
          "id": 1,
          "first_name": "Brendan",
          "last_name": "Dalpe",
          "email": "brendan.dalpe@prefect.io"
        }
      }
    }
  },
  "received": "2025-08-09T19:38:26.492Z",
  "related": [
    {
      "prefect.resource.id": "/debezium/postgresql/app",
      "prefect.resource.role": "cloudevent-source"
    },
    {
      "prefect.resource.id": "prefect-cloud.webhook.7b69ad6c-b49b-48b1-a0b0-320de001a8c5",
      "prefect.resource.name": "debezium",
      "prefect.resource.role": "webhook"
    }
  ],
  "resource": {
    "prefect.resource.id": "/debezium/postgresql/app"
  },
  "workspace": "4aee2d55-a0c5-444a-b7ca-f6d19fc574f2"
}
```

Triggering a Flow in Prefect through Automation

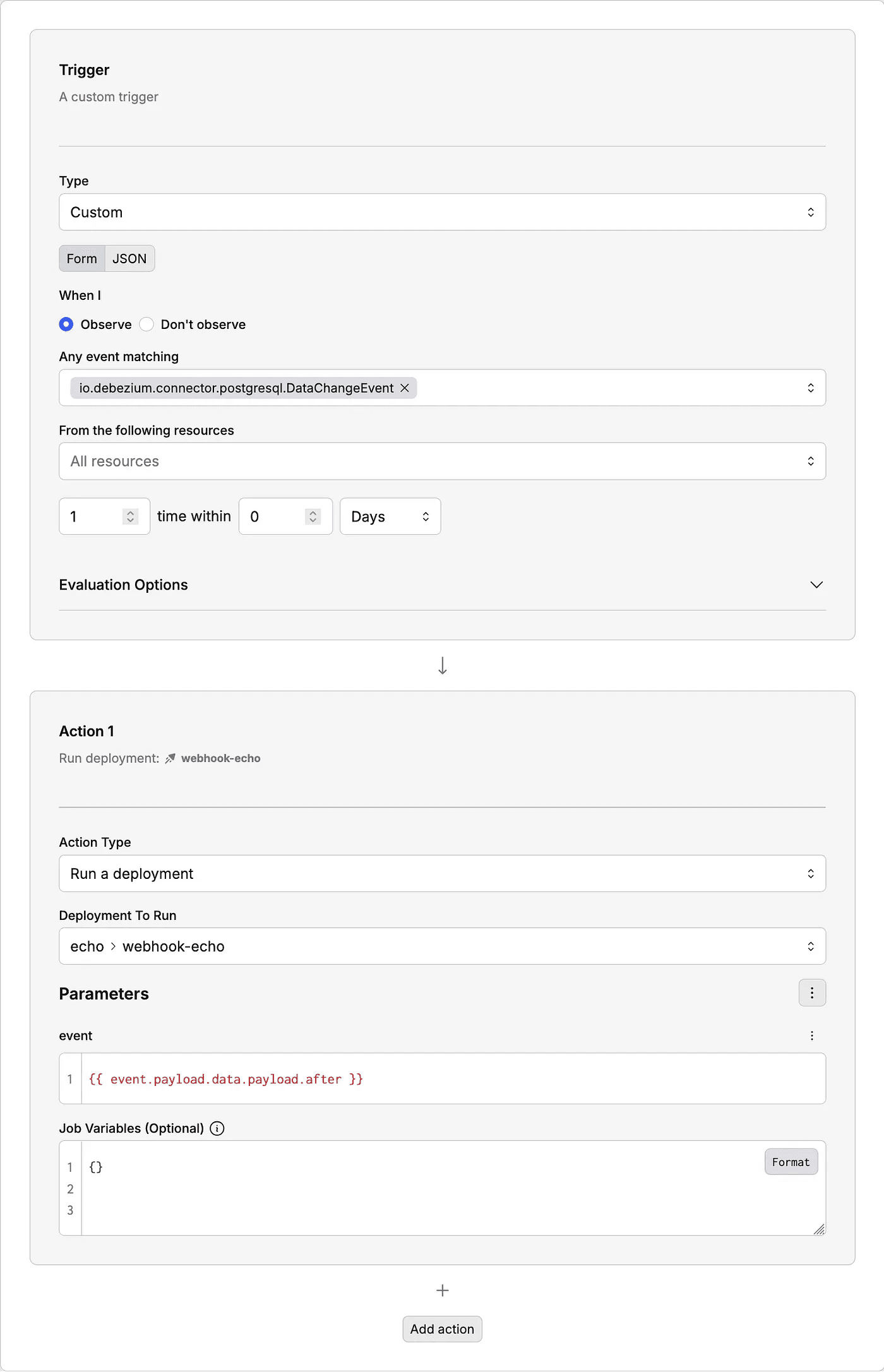

Let’s walk through a minimal example of using this event to trigger a Prefect flow. In this case, we’ll simply print the after section of the change event payload. The relevant portion of the event payload will be passed as a parameter to our workflow. Thanks to log_prints=True, the row data will appear directly in the Prefect UI.
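The automation in the deployment code that follows extracts the after section using the template path event.payload.data.payload.after. Before you wire a path into an automation, it can help to check it against a captured payload. The following plain-Python sketch of that lookup only mimics Jinja-style attribute access on nested dicts and is not Prefect’s template renderer. resolve_path is a hypothetical helper.

```python
from typing import Any


def resolve_path(root: Any, path: str) -> Any:
    """Follow a dotted path such as "event.payload.data.payload.after" through nested dicts."""
    current = root
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            raise KeyError(f"{key!r} not found while resolving {path!r}")
        current = current[key]
    return current


if __name__ == "__main__":
    # Trimmed copy of the example event: only the keys on the path are kept.
    event = {
        "event": "io.debezium.connector.postgresql.DataChangeEvent",
        "payload": {
            "data": {
                "payload": {
                    "before": None,
                    "after": {"id": 1, "first_name": "Brendan", "last_name": "Dalpe",
                              "email": "brendan.dalpe@prefect.io"},
                }
            }
        },
    }
    after = resolve_path({"event": event}, "event.payload.data.payload.after")
    print(after["email"])  # brendan.dalpe@prefect.io
```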

We’ll use the Prefect Python SDK to configure the deployment and automation programmatically:

```python
from pathlib import Path

from prefect import flow
from prefect.events import DeploymentEventTrigger


@flow(log_prints=True)
def echo(event: object):
    # log_prints=True routes this print() output to the flow run logs
    print(event)


if __name__ == "__main__":
    echo.from_source(
        source=Path(__file__).parent,
        entrypoint="main.py:echo",
    ).deploy(
        name="webhook-echo",
        work_pool_name="local",
        triggers=[
            DeploymentEventTrigger(
                name="webhook-echo-trigger",
                # fire on every Debezium change event received by the webhook
                expect=["io.debezium.connector.postgresql.DataChangeEvent"],
                parameters={
                    "event": {
                        # rendered with Jinja against the triggering event
                        "template": "{{ event.payload.data.payload.after }}",
                        "__prefect_kind": "jinja",
                    }
                },
            )
        ],
    )
```

When you run this script (python main.py), Prefect will automatically create:

- A Deployment named webhook-echo for our demonstration.

- An Automation named webhook-echo-trigger that listens for the DataChangeEvent type.

The "__prefect_kind": "jinja" entry tells Prefect to render the template against the triggering event at runtime, so only the after section of the change payload is passed to the flow’s event parameter.

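Tip: For local testing, the deployment needs a process work pool named local (create it with prefect work-pool create local --type process before running main.py) and a worker polling that pool: prefect worker start --pool local. If you chose a different work_pool_name, use it in both commands.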

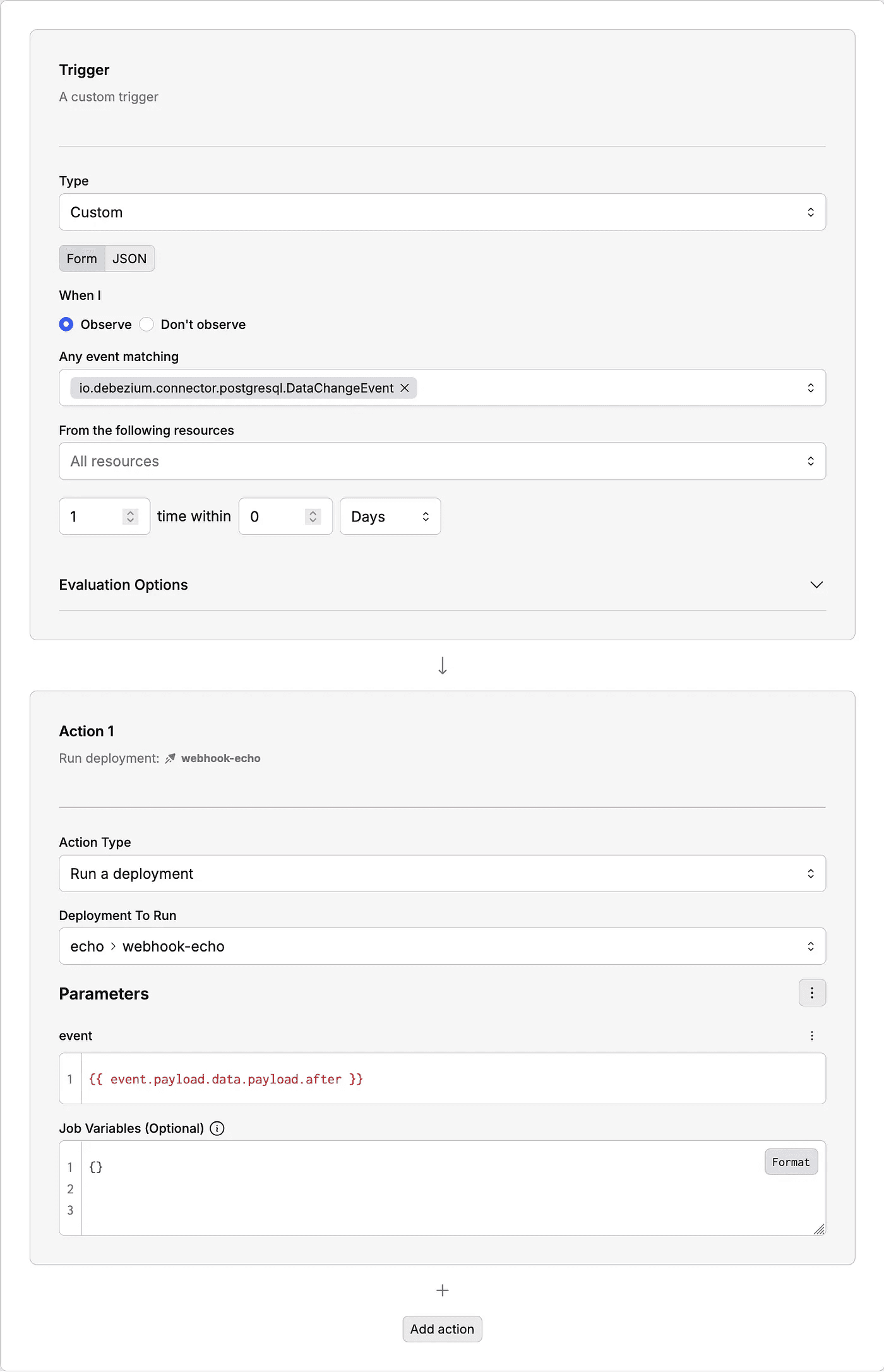

Automation created by the Python code

Automation configuration details

Testing the End-to-End Workflow

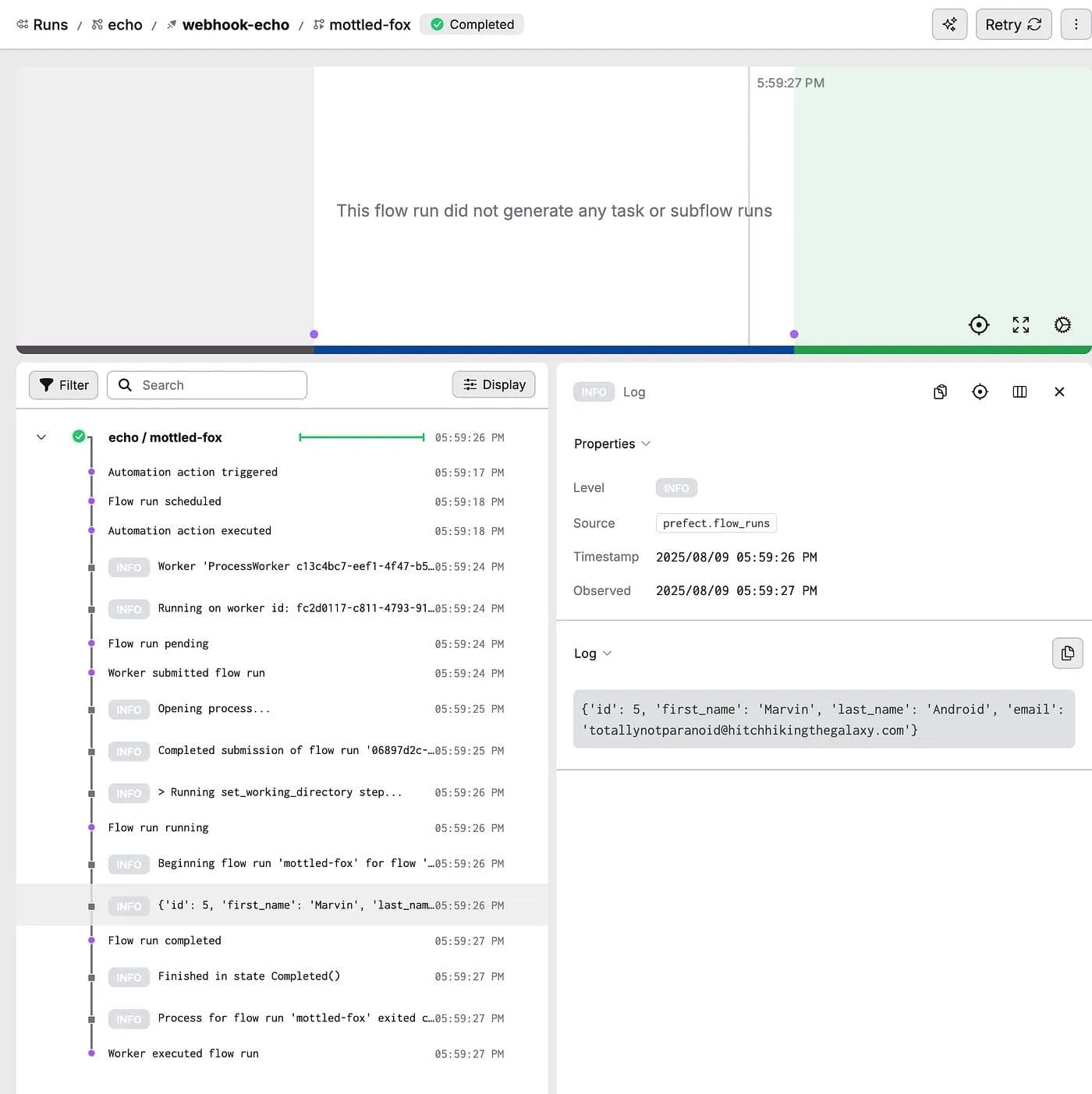

With the webhook in place, inserting another row:

```sql
INSERT INTO public.customers (first_name, last_name, email)
VALUES ('Marvin', 'Android', 'totallynotparanoid@hitchhikingthegalaxy.com');
```

will immediately trigger the automation. Within the Runs page of Prefect, you’ll see the new run along with the event data in the logs.

This completes an end-to-end pipeline: a database change flows through Debezium, is sent as a CloudEvent to Prefect via an HTTP webhook, and instantly launches an automated workflow using the actual row data. This was done without modifying the original application code.
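To gauge how quickly a change reaches Prefect, you can compare the occurred and received timestamps in the example event shown earlier. We assume occurred is the time Prefect attributes to the event (presumably taken from the CloudEvent) and received is when Prefect ingested it. The gap therefore approximates Debezium-to-Prefect delivery lag and excludes the time the automation takes to start the flow run. The following minimal sketch uses a hypothetical helper name. A single sample is an illustration, not a benchmark.

```python
from datetime import datetime


def delivery_lag_seconds(occurred: str, received: str) -> float:
    """Return the seconds between an event's occurred and received timestamps."""
    # datetime.fromisoformat() accepts a trailing "Z" only on Python 3.11+,
    # so rewrite it as an explicit UTC offset for older versions.
    start = datetime.fromisoformat(occurred.replace("Z", "+00:00"))
    end = datetime.fromisoformat(received.replace("Z", "+00:00"))
    return (end - start).total_seconds()


if __name__ == "__main__":
    # Timestamps copied from the example event in this post.
    lag = delivery_lag_seconds("2025-08-09T19:38:25.744Z", "2025-08-09T19:38:26.492Z")
    print(f"{lag:.3f} s")  # 0.748 s
```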

Conclusion: Real-Time Without the Rewrite

By combining CDC capabilities with Prefect’s orchestration, you can:

- Modernize without rewriting

- Enable instant workflows

- Shrink the gap between a data change and the workflow that reacts to it from a batch interval (often minutes) to near real time

This approach allows you to breathe new life into existing infrastructure while unlocking the power of event-driven automation. Instead of costly, risky rewrites, you can extend the usefulness of your legacy systems, integrate them into modern architectures, and respond to changes in your data as they happen.

Your legacy systems don’t need to be replaced to remain competitive — they simply need to be connected. With Prefect as the automation engine, those connections become a seamless bridge between the past and the future of your data operations.

To learn more about Prefect:

- visit our website

- visit us on GitHub to open issues and pull requests

- follow us on LinkedIn for regular updates

- join our active Slack community

Happy Engineering!