Data Pipeline Monitoring: Best Practices for Full Observability

You have a data pipeline that’s become critical to running your application - maybe even your business. How do you know at any given time that it’s running when it’s supposed to?

A robust data pipeline requires robust data pipeline monitoring. At a minimum, this requires three things:

- The right architecture to limit the scope of failures;

- Centralized logging and alerting for fast notification of failures and ease of debugging; and

- Encoding of dependencies to ensure minimum impact on stakeholders.

In this article, I’ll dig into the basics of robust data pipeline monitoring and cover some of the key practices you can implement now to keep everything running smoothly.

Robust data pipeline monitoring is key

When you first create a data pipeline, chances are you took a minimalistic approach. Maybe you wrote a Python script and stuck it in a cron job, with some logs spat out to a file sucked up by your monitoring platform. Even worse, perhaps it’s sitting in a cloud storage bucket without any eyes on it.

This may work on a small scale. But there are situations where this approach breaks down:

- Your pipeline is spread across multiple infrastructure components. For example, you’re using multiple data stores or data transformation platforms - how will cron manage those infrastructure pieces?

- You’re managing components across multiple platforms - e.g., pipelines spread across multiple cloud providers, on-premises, or across SaaS tools (or a mixture of all three!) - is ad-hoc scripting really the best way to run commodity extraction?

- Your pipeline is part of a dependency chain of upstream producers and downstream consumers, with components maintained by separate teams or partners - how will you build trust with those consumers through alerts?

In these complex environments, an almost infinite number of things can go wrong. That makes it vital that you implement a robust monitoring solution.

But introducing a new piece of infrastructure brings its own challenges. How do you ensure that your monitoring infrastructure itself is robust? Done incorrectly, monitoring can just become another point of failure in your architecture. And how do you ensure you can react quickly - even automatically - to critical errors?

Components of data pipeline monitoring

This isn’t an easy feat. At a minimum, you need to stand up the following as robust services:

Instrumentation: Log production (to view errors) as well as metrics emissions (to measure the basic data pipeline monitoring metrics).

Collection and storage: Gathering both logs and metrics from sources (via log trawling, agents, or direct API integration through an interface such as OpenTelemetry), coupled with storage and aggregation.

Observability: A dashboard on which to observe metrics, spot trends, and dig into logs. Observability is more than just monitoring: it’s the ability to see the state of your system on demand, find the root cause of issues easily, and get your system back up and running quickly in the case of failure.

Alerting: Defining metric thresholds that fire when a certain condition is reached (e.g., high CPU utilization, too much time since the last successful run). The response can take the form of either a manual alert to the on-call engineer or an automated remediation action (e.g., auto-scaling a container cluster).
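To make the alerting idea concrete, here is a minimal sketch of a "time since last successful run" check. The function name, the two-hour threshold, and the message format are all assumptions made for illustration; a real setup would hand the message to whatever paging or remediation system you use.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical threshold: alert if there has been no successful run for 2 hours.
MAX_STALENESS = timedelta(hours=2)


def check_staleness(
    last_success: datetime,
    now: datetime,
    max_staleness: timedelta = MAX_STALENESS,
) -> Optional[str]:
    """Return an alert message if the pipeline is stale, otherwise None."""
    age = now - last_success
    if age > max_staleness:
        return f"ALERT: no successful run for {age} (threshold {max_staleness})"
    return None


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(check_staleness(now - timedelta(minutes=30), now))  # within threshold
print(check_staleness(now - timedelta(hours=3), now))     # past threshold
```

Passing `now` in as an argument, rather than reading the clock inside the function, keeps the check easy to test.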

Building this all out yourself requires a ton of manual work, as well as the creation and monitoring of infra to ensure continuous, smooth operation.

Best practices for data pipeline monitoring

So those are the challenges. How do you meet them and deliver a robust data pipeline monitoring solution? The good news is that it’s not as hard as it might seem to get right if you use the right tools. Follow a few basic principles:

- Avoid monolithic architecture

- Make dependencies explicit

- Define clear metrics and thresholds

- Log and alert centrally

- Use reliable monitoring infrastructure

Let me hit each of these in detail.

Avoid monolithic architecture

It’s a common problem. Your pipeline’s stopped working. And the problem lies…somewhere.

Legacy monolithic applications are often hard to debug. They’re usually highly stateful, with all of their components running tightly coupled in a single architectural tier. This makes it difficult to pinpoint where exactly a failure occurred. And often, they’re hard - if not impossible - to run locally or to debug directly.

To avoid this problem, break a large data pipeline into individual components that interact with one another through explicit interfaces. In a data pipeline, this usually means breaking ingestion, transformation, storage, analysis, and reporting into their own modularized components. Then, monitor each component separately.

This way, if you experience an error in (for example) the ingestion portion of the pipeline, you can see clearly in your monitoring that the issue comes from this component. That rules out the rest of your pipeline and immediately narrows your focus to the problematic portion. Furthermore, you can prevent this error from propagating to other parts of your architecture - so downstream data consumers are less impacted.
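As a rough sketch of what per-component monitoring can look like, the example below runs each stage through a small wrapper that logs under the stage's own name and reports which stage failed. The stage functions, `StageError`, and `run_stage` are hypothetical names invented for this illustration, not part of any library.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")


class StageError(Exception):
    """Raised when a named pipeline stage fails."""

    def __init__(self, stage: str, cause: Exception):
        super().__init__(f"stage '{stage}' failed: {cause}")
        self.stage = stage


def run_stage(name: str, func: Callable, data):
    """Run one stage and log its outcome under the stage's own logger."""
    log = logging.getLogger(f"pipeline.{name}")
    try:
        result = func(data)
    except Exception as exc:
        log.error("failed: %s", exc)
        raise StageError(name, exc) from exc
    log.info("succeeded")
    return result


# Hypothetical stages, each with an explicit input and output.
def ingest(_):
    return ["1", "2", "oops"]


def transform(rows):
    return [int(r) for r in rows]


def store(values):
    return sum(values)


def run_pipeline(stages):
    """Feed each stage's output into the next, stopping at the first failure."""
    data = None
    for name, func in stages:
        data = run_stage(name, func, data)
    return data


try:
    run_pipeline([("ingest", ingest), ("transform", transform), ("store", store)])
except StageError as err:
    print(f"Failure isolated to: {err.stage}")
```

Because the run stops at the failing stage, the bad row never reaches `store`, which is the "don't propagate" half of the argument.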

💡 TLDR: Breaking up your pipeline into distinct services helps reduce fragility.

Make dependencies explicit

A complex pipeline that’s part of a larger architecture is even harder to debug than one that runs in relative isolation. For example, if your refund reconciliation report depends on a QuickBooks API export in Fivetran that’s breaking, you may spend days tracking down an error that isn’t even your team’s fault.

The explicit dependencies principle says that all of a process’ dependencies should be encoded in its public interface. This is as good an idea (maybe even a better one) for data pipelines as it is for classes and packages in code.

In a data pipeline, every component should be explicit about its inputs and outputs - the data it expects to receive and the format of the data it outputs. You can accomplish this in a number of ways:

- Clearly document all your data formats. This can be done via JSON schemas, OpenAPI specifications, code comments, and/or external (and easy-to-find) documentation.

- Enforce all data preconditions and postconditions using packages like Pydantic. If you expect a field coming from a source to be an integer, make sure it’s an integer - and stop the pipeline run if it fails that test.

Part of making dependencies explicit is emitting clear error messages on failure. In the case of the data type failure above, if you blindly pass it on without testing it, you can spend minutes or even hours tracking down an obscure error message emitted by a random Python method. Instead, test your data and log an explicit error message detailing what the problem data is and what precondition it failed.
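Here is a minimal sketch of that "test, then fail loudly" pattern. It uses plain Python rather than Pydantic to stay dependency-free; a library such as Pydantic would express the same contract declaratively. The schema, field names, and `PreconditionError` class are assumptions for illustration.

```python
class PreconditionError(ValueError):
    """Raised when an incoming record violates a declared precondition."""


# Hypothetical contract for one pipeline input: field name -> required type.
ORDER_SCHEMA = {"order_id": int, "amount": float}


def validate_record(record: dict, schema: dict) -> dict:
    """Return the record unchanged if it satisfies the schema, else raise."""
    for field, expected in schema.items():
        if field not in record:
            raise PreconditionError(f"missing field '{field}' in record {record!r}")
        value = record[field]
        if not isinstance(value, expected):
            # Name the field, the expectation, and the offending data.
            raise PreconditionError(
                f"field '{field}' expected {expected.__name__}, "
                f"got {type(value).__name__} ({value!r}) in record {record!r}"
            )
    return record


try:
    validate_record({"order_id": "A-17", "amount": 9.99}, ORDER_SCHEMA)
except PreconditionError as err:
    # Stop the run here instead of passing bad data downstream.
    print(f"Stopping run: {err}")
```

The error names the field, the expected type, and the actual value, so whoever reads the log does not need to reproduce the failure to understand it.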

💡 TLDR: Encoding dependencies explicitly in code reduces debugging time.

Define clear metrics and thresholds

A lot of times, you may not notice you have a data issue until someone tells you a report that the business depends on “looks off”. This is usually due to a failure to define clear metrics and thresholds for important aspects of your data pipeline.

At a minimum, make sure to capture and define thresholds for the following data pipeline observability metrics:

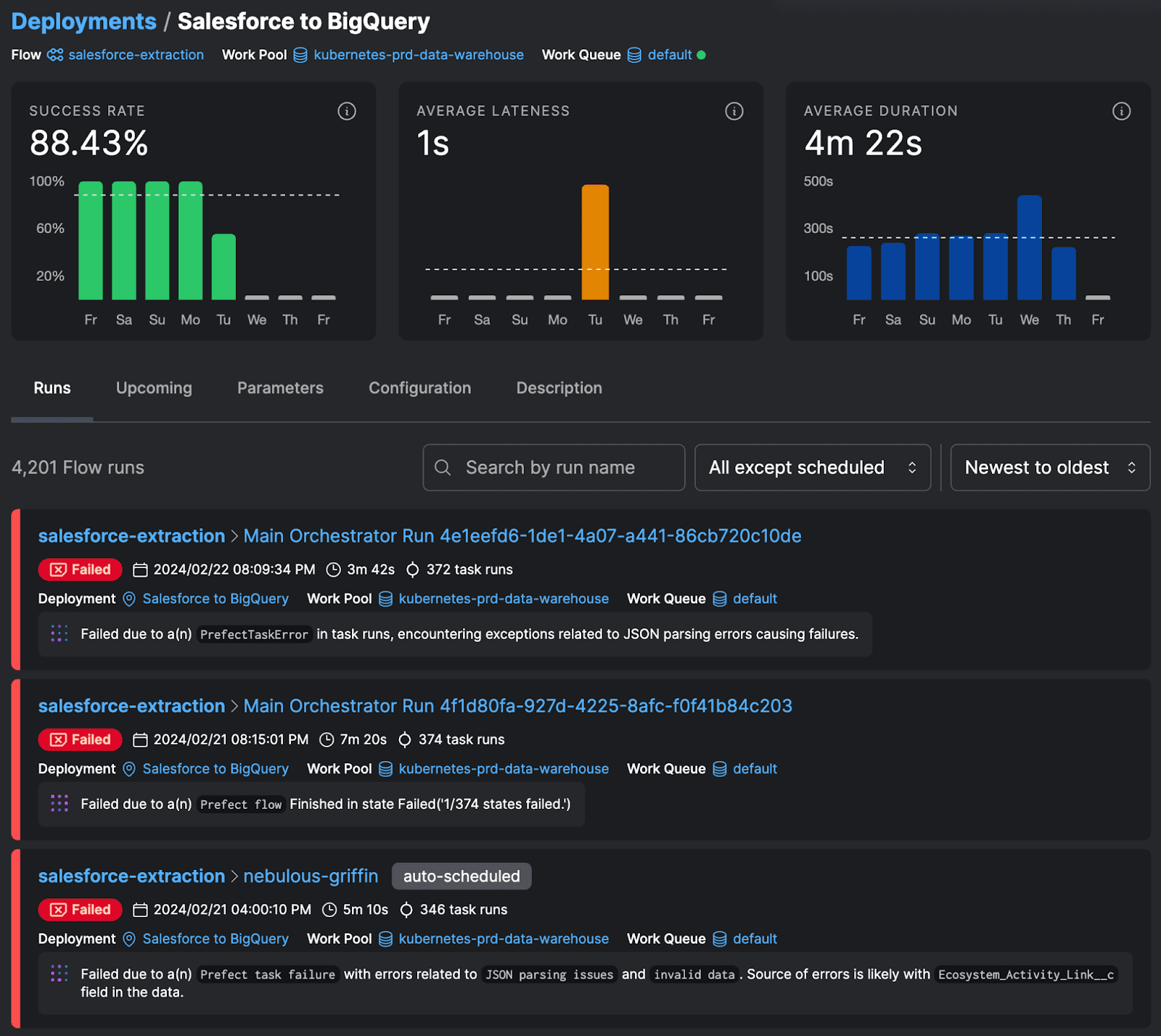

- Consistency: Success rate - When was the last time your data pipeline ran to completion? How long can it go without a successful run before it’s officially “A Problem”?

- Timeliness: Duration and latency - How long does it take your data pipeline to run? Is that time increasing or decreasing? What do you consider “too long” (or “too short”)?

- Validity: Failure types - If your pipeline fails occasionally, why does it fail? Is that an expected error (e.g., a remote API that’s temporarily unreachable)? Or is some upstream process sending bum data on every third batch? This is where observability - the ability to see exactly what’s happening in your pipeline at any time, for any run - comes into play.
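One way to make these three metrics concrete is to compute them from a history of run records. The sketch below assumes a hypothetical `Run` record and a made-up 30-minute duration threshold; in practice the records would come from your orchestrator or log store.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Run:
    started: datetime
    duration: timedelta
    error: Optional[str] = None  # None means the run succeeded


def summarize(runs: list) -> dict:
    """Compute consistency, timeliness, and validity metrics.

    Assumes a non-empty list of Run records.
    """
    successes = [r for r in runs if r.error is None]
    return {
        # Consistency: how often runs succeed, and when one last did.
        "success_rate": len(successes) / len(runs),
        "last_success": max((r.started for r in successes), default=None),
        # Timeliness: the slowest run in the window.
        "max_duration": max(r.duration for r in runs),
        # Validity: failures grouped by type.
        "failure_types": Counter(r.error for r in runs if r.error),
    }


t0 = datetime(2024, 1, 1)
runs = [
    Run(t0, timedelta(minutes=10)),
    Run(t0 + timedelta(hours=1), timedelta(minutes=12), error="UpstreamTimeout"),
    Run(t0 + timedelta(hours=2), timedelta(minutes=55)),
    Run(t0 + timedelta(hours=3), timedelta(minutes=11), error="UpstreamTimeout"),
]
metrics = summarize(runs)

# Hypothetical threshold: a run that normally takes ~10 minutes is "too long" at 30.
if metrics["max_duration"] > timedelta(minutes=30):
    print("Duration threshold exceeded:", metrics["max_duration"])
```

Grouping failures by type is what lets you tell an occasional, expected timeout apart from a recurring upstream data problem.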

You should also have a handle on system-level metrics for your data pipeline infrastructure. How much compute do you require to run a pipeline? How much is it costing you?

A data pipeline monitoring solution like Prefect will not only show you this data - it’ll enable you to issue alerts if anything goes out of range. For example, if that pipeline that typically takes 10 minutes takes an hour, you can generate and dispatch an incident to your team’s on-call. You can even program in more complex behaviors, such as creating an interactive workflow that routes certain runs (e.g., large data processing jobs that may require expensive compute) for manual approval.

For instance, the Prefect deployment view enables observing key metrics for your data pipelines and workflows. You can click on any run to see what failed and why.

💡 TLDR: Clearly defined pipeline metrics ensure everyone is on the same page about which failures are and are not tolerable, and they prevent alert fatigue.

Log and alert centrally

The problem with a data pipeline whose components are all over the place is that…well, they’re all over the place. And so are their logs and alerts.

Did your data pipeline fail because your AWS Lambda function failed to find a file in S3? Or because of an error with a dbt transformation? Or because the Docker container containing your machine learning training algorithms encountered an unexpected null value? In this case, pinning down the culprit may require tailing logs across half a dozen or more independent systems.

To overcome this issue, ship logs and metrics to a single location for your entire end-to-end data pipeline. Use some method - e.g., a uniquely generated UUID - to tag a run so you can trace it throughout all the components of your architecture. Whenever possible, work with upstream producers and downstream consumers to integrate this solution in their components as well. That way, you only have to look at a single dashboard to find what failed and why.
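A minimal sketch of run tagging with Python's standard `logging` module follows. The `make_run_logger` helper, the component names, and the log format are assumptions for illustration; the point is only that every component stamps the same run ID on every line it emits, so a central log store can filter on it.

```python
import logging
import uuid


def make_run_logger(component: str, run_id: str, stream=None) -> logging.LoggerAdapter:
    """Return a logger that stamps every record with the shared run ID."""
    logger = logging.getLogger(f"pipeline.{component}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # avoid duplicate lines via the root logger
    if not logger.handlers:
        handler = logging.StreamHandler(stream)  # defaults to stderr
        handler.setFormatter(
            logging.Formatter("run=%(run_id)s %(name)s %(levelname)s %(message)s")
        )
        logger.addHandler(handler)
    # LoggerAdapter injects the extra dict into every record it emits.
    return logging.LoggerAdapter(logger, {"run_id": run_id})


run_id = str(uuid.uuid4())  # generated once, at the start of the run
ingest_log = make_run_logger("ingest", run_id)
transform_log = make_run_logger("transform", run_id)

ingest_log.info("fetched 120 rows")
transform_log.error("unexpected null in column 'amount'")
```

In a distributed pipeline, the run ID would also need to travel with the data or the job request (for example as a message header or job parameter) so that downstream components can reuse it.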

💡 TLDR: Centralized logs reduce the time required to fix failures.

Use reliable monitoring infrastructure

As I said, building all of this out yourself is a pain. It’s undifferentiated heavy lifting with no direct relation to your business - so why spend engineering hours building and maintaining monitoring infra? It’s especially painful if you’re managing multiple pipelines spread across on-prem, cloud, and SaaS hosted services.

However, let’s say you do take the time to build it out. The question is: what happens if the monitoring infra itself fails? Your monitoring is now a significant point of failure in your architecture. If it goes down and critical business processes fail, you could find yourself left in the dark.

We built Prefect to manage exactly this pain point for data pipelines and workflows. Prefect is a workflow orchestration platform that enables monitoring background jobs across your infrastructure. With Prefect, you can encode your workflows as flows divided into tasks, using Python to orchestrate and manage components.

Using Prefect, you can implement all the high points of a reliable data pipeline monitoring solution:

- Avoid a monolithic architecture by dividing your workflows into flows and tasks. Then, chain multiple workflows together and run sub-flows to implement complex workflows that are well-partitioned and easy to debug.

- Make dependencies explicit automatically through the flow and task decorators, while enforcing schemas with Pydantic (which comes built into Prefect from the ground up).

- Rely on reliable, scalable monitoring that lives where your workflows live and can react to failures with backup processes.

- Define clear metrics and thresholds, including custom metrics, from the Prefect dashboard. See the status of all your workflows at a glance.

- Centralize all workflow alerting and logging in Prefect, giving all engineers and SREs a single place to pinpoint and debug issues quickly. Use Prefect’s rich logging support and AI summarization technology to automate generation of detailed error messages with Prefect + Pydantic.

- Let Prefect handle the monitoring infrastructure for you - including managing compute for your backfill tasks - so you can focus on the code that’s relevant to your business.

Major League Baseball’s Washington Nationals used Prefect for just this purpose to consolidate data from multiple APIs. They used Prefect’s centralized monitoring, not only to see when errors happened, but to implement automated responses to some of their most common issues. This gave the Nationals full observability into all of their pipelines, no matter whether the code ran directly on Prefect, in the cloud, or on the team’s legacy systems.

See for yourself how Prefect can bring you greater observability and monitoring - sign up for a free account and try out the first tutorial.