Data Validation with Pydantic

Let’s be honest, shall we? There’s a reason lots of us put off writing data validation code. It’s not exactly the most glamorous part of programming.

However, data validation is an absolute necessity. At best, not having it leads to a poor user experience, as users try to make sense of strange and confusing errors. Like the time I was working on a Microsoft ASP application back in the day and got a message in the Event Log stating “the data is the error.” (Thanks, Microsoft.)

At worst, data validation errors can lead to runtime errors that take down entire subsystems. That can lead to full-blown downtime and loss of revenue. Implementing data validation from scratch is time-consuming. Done poorly, it makes code even harder to read and debug.

That’s why, at Prefect, we’re big fans of leveraging Pydantic to implement data validation. In this article, I’ll show how performing data validation with Pydantic reduces overhead, makes your systems more resilient, and even improves the legibility of your code.

Why data validation with Pydantic?

Consider background tasks integral to your architecture that run like services. These may run on a schedule or as event-driven tasks - e.g., webhooks triggered by a post to a Kafka topic, or REST calls to an API with an arbitrary JSON body. Validating this data before further processing and storage is critical if you want to avoid application errors and crippling data quality issues.

But, like writing tests, writing data validation code is time-consuming. It often requires writing custom validation code and establishing conventions for your teams to follow.

Due to the overhead, many developers and teams don’t write data validation code at all. Those that do often do it each in their own special way - and, often, without thinking about code reuse. The result is duplicative data validation code that obscures the meaning of the underlying logic rather than reinforces it.

The bottom line is, if your application has unit tests, it needs data validation for the same reasons. Unit tests ensure your application can gracefully handle any situation the real world throws at it. Data validation enforces and documents your application’s assumptions and constraints at runtime.

This is where Pydantic can help, big time.

If you’re new to Pydantic, you can read my previous article as a backgrounder. In a nutshell, Pydantic provides a framework for data validation for Python applications that’s 100% Pythonic. It’s an indispensable tool for validating data supplied by end users or outside systems - i.e., data that may or may not conform to your system’s definition of “properly formatted”.

Using Pydantic’s BaseModel class, you define model classes that represent your data’s fields and their desired type. Pydantic validates that your data conforms to these types (or can be coerced into these types), providing a level of type safety when handling arbitrary data.

For example, let’s say you have an object representing a customer with two fields: a numeric ID and a field designating whether or not the customer is active. You can represent this by defining a model for Customer as follows:

    from pydantic import BaseModel, ValidationError

    class Customer(BaseModel):
        id: int
        is_active: bool

Then, you can create some data for your model:

    data = {"id": "123", "is_active": "true"}

Finally, you can create an instance of your Customer class and see if your data is valid:

    try:
        cust = Customer(**data)
    except ValidationError as e:
        for error in e.errors():
            print(f'{error["input"]} - {error["msg"]}')

If you run this code, you’ll see it completes without an error. But what if you change your data to the following?

    data = {"id": "123.1", "is_active": "true"}

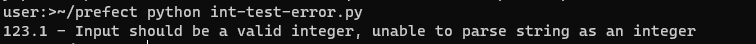

If you run this, Pydantic will throw an error, because you’ve attempted to pass a string decimal to an integer field:

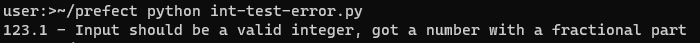

You’ll also get a similar error if you attempt to pass id as an actual Python float with a fractional part:

    data = {"id": 123.1, "is_active": "true"}
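The three cases above can be reproduced in one short script. This is a minimal sketch that assumes Pydantic v2 (the `input` key in the error dictionaries is a v2 feature); the `try_customer` helper is a hypothetical name introduced here for illustration, not something from Pydantic.

```python
from pydantic import BaseModel, ValidationError


class Customer(BaseModel):
    id: int
    is_active: bool


def try_customer(data: dict):
    """Hypothetical helper: return (customer, errors); one of the two is None."""
    try:
        return Customer(**data), None
    except ValidationError as e:
        return None, e.errors()


# The string "123" is coerced into the integer 123.
cust, errors = try_customer({"id": "123", "is_active": "true"})
print(type(cust.id).__name__, cust.id, cust.is_active)

# Neither "123.1" nor 123.1 can become an int without losing information,
# so both are rejected and reported against the "id" field.
for bad_id in ("123.1", 123.1):
    cust, errors = try_customer({"id": bad_id, "is_active": "true"})
    for error in errors:
        print(f'{error["loc"]} - {error["input"]!r} - {error["msg"]}')
```

Printing `error["loc"]` alongside the message is a small design choice: once a model has more than a couple of fields, it tells you which one failed.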

Pydantic ships with a small army of built-in validators for a wide range of types. These include simple data types, like int and date, and complex types such as e-mail addresses, UUIDs, typed dictionaries, sets, and unions. This usually means that you can fulfill the majority of your data validation needs with very few lines of code.
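To give a feel for a few of these built-in types, here is a small sketch assuming Pydantic v2. The `Order` model and its field names are made up for illustration and are not part of the customer example.

```python
from datetime import date
from typing import Set, Union
from uuid import UUID

from pydantic import BaseModel


class Order(BaseModel):  # hypothetical model, for illustration only
    order_id: UUID
    placed_on: date
    tags: Set[str]
    reference: Union[int, str]


raw = {
    "order_id": "4ca4604d-c1c6-454e-aa8b-306041792031",
    "placed_on": "2024-01-31",               # ISO date string -> datetime.date
    "tags": ["gift", "gift", "priority"],    # list -> set, duplicates dropped
    "reference": 42,                         # matches the int branch of the union
}

order = Order(**raw)
print(type(order.order_id).__name__)
print(order.placed_on.year)
print(sorted(order.tags))
print(order.reference)
```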

Once you’ve defined your Pydantic models, you can use - and reuse - them across your codebase. This reduces duplication and enforces a consistent approach to data validation across developers and teams.

Pydantic data validation: One thing to keep in mind

Before I delve more deeply into our example above, there’s one important thing to keep in mind.

While I keep talking about Pydantic and data validation, Pydantic is technically a data parser. In other words, when you declare a type in Pydantic and load data into it, Pydantic will attempt to coerce whatever data you give it into that format.

Let’s take a simple example. Say you create a Pydantic model for a customer with a field, is_active, that represents whether the customer is an active customer or not.

    from pydantic import BaseModel, ValidationError

    class Customer(BaseModel):
        is_active: bool

Now, let’s say you pass the following data:

    data = {"is_active": "true"}

Next, try and load this data. If this data doesn’t have any issues, Pydantic will run without output. If it encounters an error loading it, it’ll throw a ValidationError, which we’ll catch and print out.

    try:
        cust = Customer(**data)
    except ValidationError as e:
        for error in e.errors():
            print(f'{error["input"]} - {error["msg"]}')

If you run this code, it doesn’t throw an error because Pydantic can convert the string “true” to a boolean value. Pretty uncontroversial so far.

But what happens if you pass the following data?

    data = {"is_active": "yes"}

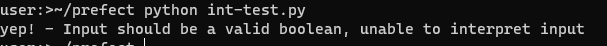

It turns out this also works! Pydantic converts “yes” into a boolean true and “no” into a boolean false. There are limits to Pydantic’s flexibility, of course. If you try and use slang, you’re not gonna get very far:

    data = {"is_active": "yep!"}

In this case, Pydantic throws a ValidationError and tells us to come back later once we have better data.
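A quick way to see where Pydantic draws the line is to run a handful of strings through the model. The sketch below assumes Pydantic v2; `parse_flag` is a hypothetical helper name used only for this illustration.

```python
from pydantic import BaseModel, ValidationError


class Customer(BaseModel):
    is_active: bool


def parse_flag(value):
    """Hypothetical helper: return the coerced bool, or None if rejected."""
    try:
        return Customer(is_active=value).is_active
    except ValidationError:
        return None


# "true", "yes" and "no" are coerced; "yep!" is rejected.
for value in ("true", "yes", "no", "yep!"):
    print(f"{value!r} -> {parse_flag(value)}")
```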

For most applications, this behavior is fine and even desirable. But you should be mindful this is what Pydantic is doing, as there may be occasions where you don’t want this behavior. Fortunately, you can change how Pydantic does parsing by using strict mode. For example, we could change our Pydantic model as follows:

    from pydantic import BaseModel, Field, ValidationError

    class Customer(BaseModel):
        is_active: bool = Field(strict=True)

If you run this with is_active set to yes, Pydantic will throw an error. In fact, with strict on, it won’t accept any string value - you’ll have to pass an actual Python boolean type:

    data = {"is_active": True}
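Strictness can be set per field, as above, or for a whole model. The following is a minimal sketch assuming Pydantic v2, where `ConfigDict(strict=True)` applies strict mode to every field. The two class names and the `is_valid` helper are hypothetical and exist only to compare the two approaches side by side.

```python
from pydantic import BaseModel, ConfigDict, Field, ValidationError


class FieldStrictCustomer(BaseModel):
    id: int                               # still coerced from "123"
    is_active: bool = Field(strict=True)  # must be a real bool


class FullyStrictCustomer(BaseModel):
    model_config = ConfigDict(strict=True)  # strict mode for every field
    id: int
    is_active: bool


def is_valid(model, data: dict) -> bool:
    """Hypothetical helper: True if the data validates against the model."""
    try:
        model(**data)
        return True
    except ValidationError:
        return False


print(is_valid(FieldStrictCustomer, {"id": "123", "is_active": True}))
print(is_valid(FieldStrictCustomer, {"id": "123", "is_active": "yes"}))
print(is_valid(FullyStrictCustomer, {"id": "123", "is_active": True}))
print(is_valid(FullyStrictCustomer, {"id": 123, "is_active": True}))
```

Field-level strictness is the gentler option: it lets you lock down the fields where silent coercion would be risky while keeping the convenient parsing behavior everywhere else.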

Example: Data validation with Pydantic

Let’s expand our example above. After all, a customer with just an int and a bool isn’t a very interesting customer. Let’s say our customer needs these fields as well:

- A UUID that acts as a globally unique identifier for the customer across the company (we can assume the integer ID is a local database auto-increment or perhaps a legacy value)

- An IP address representing the address of the computer from where the customer last logged in

- The customer’s e-mail address

Assume that you’re writing code that takes the record of an inactive customer, scans their account history, and checks whether there are any special deals or offers you can e-mail them to get them to revisit your site. Assume this runs from a database trigger that, after x days of inactivity, calls a webhook that we’ll publish using Prefect.

Want to hear something fun? Implementing this in Pydantic is really easy. All we need to do is change the code for our model to include these lines:

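    from uuid import UUID

    from pydantic import BaseModel, EmailStr, IPvAnyAddress, ValidationError

    class Customer(BaseModel):
        id: int
        is_active: bool
        guid: UUID
        email: EmailStr  # requires the optional email-validator package
        lastIPAddress: IPvAnyAddress

Pydantic uses the UUID type from Python’s standard library for data validation. By leveraging this and Pydantic’s EmailStr and IPvAnyAddress types, we can add additional validation to our model with just three lines of code.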

We can then test our new model with some sample data:

data = {

"id": "123",

"is_active": "false",

"guid": "4ca4604d-c1c6-454e-aa8b-306041792031",

"email": "jane@domain.com",

"lastIPAddress": "10.0.0.1"

}

try:

cust = Customer(**data)

except ValidationError as e:

for error in e.errors():

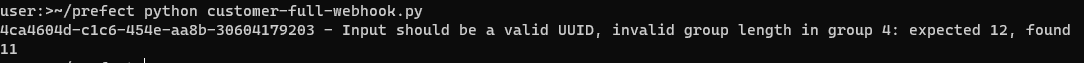

print(f'{error["input"]} - {error["msg"]}')The call to create a Customer object will run without error. If you somehow lose a digit on the UUID, however, you’ll get a failure: "guid": "4ca4604d-c1c6-454e-aa8b-30604179203"
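Pydantic reports all failing fields in a single ValidationError, rather than stopping at the first one. The sketch below assumes Pydantic v2 and uses a trimmed-down copy of the model (the email field is left out so the snippet runs without the optional email-validator package); `failed_fields` is a hypothetical helper name.

```python
from uuid import UUID

from pydantic import BaseModel, IPvAnyAddress, ValidationError


class Customer(BaseModel):
    # Trimmed copy of the article's model; email omitted on purpose.
    id: int
    guid: UUID
    lastIPAddress: IPvAnyAddress


def failed_fields(data: dict) -> list:
    """Hypothetical helper: names of the fields that failed validation."""
    try:
        Customer(**data)
        return []
    except ValidationError as e:
        return [error["loc"][0] for error in e.errors()]


bad = {
    "id": "abc",                                     # not an integer
    "guid": "4ca4604d-c1c6-454e-aa8b-30604179203",   # one digit short
    "lastIPAddress": "10.0.0.999",                   # octet out of range
}

print(failed_fields(bad))
```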

Using data validation in Pydantic with Prefect

To ship this code as a webhook, we can deploy it using Prefect. Prefect’s a workflow orchestration and observability platform that provides a centralized, consistent approach to running scheduled and event-driven workloads in the background.

To deploy this with Prefect, you’ll want to package it as a flow. I described how to do this in our previous article on Pydantic - you can look there for information on how to set up Prefect for local development and the mechanics of creating a flow.

You can designate any function in Python as a flow by using Prefect’s @flow decorator. Even better, since Prefect supports Pydantic out-of-the-box, you can use your Pydantic models as arguments to your flows. Prefect will automatically parse the JSON it receives from a call to your flow (e.g., via a webhook) into your model type.

To turn the above code into a flow, then, you need only create a Python function with a @flow decorator and designate that it takes an argument of type Customer:

    @flow
    def contact_customer(data: Customer):
        # Do work
        return

The full code (with Prefect library imports) would look like this:

    from pydantic import BaseModel, ValidationError, EmailStr, IPvAnyAddress
    from uuid import UUID
    from prefect import flow

    class Customer(BaseModel):
        id: int
        is_active: bool
        guid: UUID
        email: EmailStr
        lastIPAddress: IPvAnyAddress

    @flow
    def contact_customer(data: Customer):
        # Do work
        return

    if __name__ == "__main__":
        contact_customer.serve(name="contact_customer_deployment")

If you run this code, the line in __main__ will create a Prefect deployment that awaits run requests. Now you can wire this flow to a Prefect webhook and run it from any external process via an HTTP request.
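Before wiring up the webhook, it can help to check a sample JSON payload against the model locally. The sketch below is not Prefect’s internal code. It only illustrates the parsing step using Pydantic v2’s `model_validate_json`. The `parse_payload` helper is a hypothetical name, and the email field is omitted so the snippet runs without the optional email-validator package.

```python
import json
from typing import Optional
from uuid import UUID

from pydantic import BaseModel, IPvAnyAddress, ValidationError


class Customer(BaseModel):
    # Trimmed copy of the article's model; email omitted on purpose.
    id: int
    is_active: bool
    guid: UUID
    lastIPAddress: IPvAnyAddress


def parse_payload(raw: str) -> Optional[Customer]:
    """Hypothetical helper: parse a JSON string, or return None if invalid."""
    try:
        return Customer.model_validate_json(raw)
    except ValidationError as e:
        for error in e.errors():
            print(f'{error["loc"]} - {error["msg"]}')
        return None


payload = json.dumps({
    "id": 123,
    "is_active": False,
    "guid": "4ca4604d-c1c6-454e-aa8b-306041792031",
    "lastIPAddress": "10.0.0.1",
})

cust = parse_payload(payload)
print(cust)
```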

Final thoughts

Using Pydantic with Prefect, you can create observable workflows callable across your entire infrastructure while ensuring high data quality. To try it out yourself, sign up for a free Prefect account and create your first workflow with Pydantic.