dbt Coalesce 2024 Takeaways

Did you miss out on dbt’s Coalesce conference this year in Las Vegas? Maybe you’re looking for a quick write-up on some of the standout sessions from the show. You’re in luck! In this post, I’ll outline a few of my favorite sessions from Coalesce and share what I learned.

And if you’re still craving more dbt content, you’re in for a treat: Prefect has an exciting event coming up in collaboration with dbt. We hope to see you there, and in the meantime, let’s dive into the Coalesce highlights!

dbt Core: Our Love Story

The open-source community is part of what makes the data world special. dbt Labs develops both dbt Core, its open-source tool, and dbt Cloud, its commercial platform. Grace Goheen from dbt Labs used this session to renew her vows with dbt Core and describe how the company will continue to build its relationship with the community (unfortunately, there wasn’t an Elvis impersonator officiating).

For an open-source project, it can be challenging to prioritize what to build next: it’s impossible to know all of a tool’s users, let alone speak to each of them directly. Grace outlined how the dbt team decides on new features. The process starts with requests on GitHub. The team doesn’t ignore feedback from other channels like Slack, but they prefer that feedback come through GitHub, a common theme among open-source tools, including Prefect.

Once a feature is proposed on GitHub, the team initiates a discussion with the community to see if the feature can be addressed through pull requests (PRs) from community members. If it can, then the team deprioritizes it internally. However, sometimes a feature requires changes to dbt itself to function properly. In such cases, the team will discuss it internally before moving forward.

After deciding on a solution, the team shares its ideas with the community on GitHub and seeks additional feedback on anything it may have overlooked. The team then develops and releases the solution.

This feature release cycle ensures that the community is involved throughout the process and that the team builds features that are essential to the users.

Grace also shared a couple of priorities for dbt Core in the near future: support for the Iceberg table format and the ability to limit data to time-based samples, such as the last 3 days of data.
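To make the idea of a time-based sample concrete, here is a minimal Python sketch that keeps only the rows from the last three days. It is not how dbt Core implements (or plans to implement) the feature; the row dictionaries, the `loaded_at` field, and the `time_based_sample` function are hypothetical stand-ins.

```python
from datetime import datetime, timedelta, timezone


def time_based_sample(rows, timestamp_field, days, now=None):
    """Keep only rows whose timestamp falls within the last `days` days."""
    # `now` can be passed in so the cutoff is reproducible; defaults to the current UTC time.
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    return [row for row in rows if row[timestamp_field] >= cutoff]


if __name__ == "__main__":
    now = datetime(2024, 10, 10, tzinfo=timezone.utc)
    rows = [
        {"order_id": 1, "loaded_at": now - timedelta(days=1)},
        {"order_id": 2, "loaded_at": now - timedelta(days=5)},
        {"order_id": 3, "loaded_at": now - timedelta(hours=70)},
    ]
    sample = time_based_sample(rows, "loaded_at", days=3, now=now)
    print([row["order_id"] for row in sample])  # [1, 3]
```

During development, a sample like this means a model runs against a few days of data instead of the full history, which is the speed-up the feature is after.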

Surfing the LLM Wave

A Coalesce session on LLMs and AI entering the data world may sound like old news. Thankfully, Amanda and Erika from Hex took a novel approach in their session. While they acknowledged that the wave of LLMs crashing into the data world is inevitable, they emphasized that data professionals can position themselves to lead the change rather than be swept away.

Their game plan boiled down to three main points: “Be a partner, not a roadblock,” “What’s good for LLMs is good for humans,” and “Know your levers for accuracy.”

We’ve all seen headlines touting LLMs with accuracy levels of 70% or higher. While that sounds great, anyone who’s worked with LLMs knows that real-world performance rarely matches those numbers. In their tests with around 90 typical customer queries, Hex’s LLM scored just 30% accuracy.
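The session didn’t walk through Hex’s test harness, so the sketch below only assumes how an accuracy number like that might be produced: run each benchmark question through the model, compare the answer with an expected one, and report the share that match. `evaluate_accuracy`, the questions, and `fake_model` are all hypothetical; `fake_model` stands in for a real LLM call.

```python
from typing import Callable


def evaluate_accuracy(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of (question, expected_answer) cases the model answers correctly."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(
        1
        for question, expected in cases
        # Case- and whitespace-insensitive exact match; real evals often need fuzzier checks.
        if model(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(cases)


if __name__ == "__main__":
    # Hypothetical benchmark and a stand-in "model" that only knows one answer.
    cases = [
        ("How many orders did we ship last week?", "1204"),
        ("Which region had the most churn?", "EMEA"),
        ("What was Q3 net revenue?", "$2.1M"),
    ]
    known = {"How many orders did we ship last week?": "1204"}

    def fake_model(question: str) -> str:
        return known.get(question, "I don't know")

    print(f"accuracy: {evaluate_accuracy(fake_model, cases):.0%}")  # accuracy: 33%
```

A fixed set of questions like this is what lets you tell whether the changes described next actually move the number.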

This is where data professionals step in. The team used three strategies to improve their model’s performance:

Datasets

Just like a new employee being onboarded, an LLM can be overwhelmed by the number of tables in a dataset. To help, you can remove irrelevant tables and endorse the ones the model should actually use. This helps the model focus on the most important data and boosts accuracy.
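One way to picture endorsement is as a filter applied to the catalog before anything reaches the model’s context. This is a minimal sketch assuming a hypothetical in-memory catalog where each table carries an `endorsed` flag; tools like Hex expose endorsement through their own interfaces, not through code like this.

```python
from dataclasses import dataclass


@dataclass
class Table:
    name: str
    description: str
    endorsed: bool = False  # hypothetical flag: approved for use by the LLM


def tables_for_llm(catalog: list[Table]) -> list[Table]:
    """Return only endorsed tables, so irrelevant ones never reach the model's context."""
    return [table for table in catalog if table.endorsed]


if __name__ == "__main__":
    catalog = [
        Table("fct_orders", "One row per completed order", endorsed=True),
        Table("tmp_orders_backup_2019", "Old manual backup"),
        Table("dim_customers", "One row per customer", endorsed=True),
    ]
    print([table.name for table in tables_for_llm(catalog)])  # ['fct_orders', 'dim_customers']
```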

Documentation

Remember starting your first data job and struggling to understand both the data and the business logic behind it? LLMs face the same challenge. Unlike humans, though, they don’t know to send a Slack message to a coworker when they need help.

We can help LLMs (and ourselves) by providing thorough documentation, explaining what fields mean, and giving them the business context they need to make better decisions.
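As a rough illustration, column-level documentation can be rendered into the text an LLM receives alongside a user’s question. The `build_context` function, the table, and the prompt layout below are assumptions made for illustration, not Hex’s or dbt’s actual format.

```python
def build_context(table: str, table_doc: str, columns: dict[str, str]) -> str:
    """Render table- and column-level documentation as plain text for an LLM prompt."""
    lines = [f"Table {table}: {table_doc}"]
    for column, description in columns.items():
        # Flag gaps explicitly instead of silently dropping undocumented columns.
        lines.append(f"- {column}: {description or 'UNDOCUMENTED'}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_context(
        "fct_orders",
        "One row per completed order; excludes internal test accounts.",
        {
            "order_id": "Primary key.",
            "net_revenue": "Order revenue after refunds and discounts, in USD.",
            "legacy_flag": "",
        },
    ))
```

Marking missing descriptions as `UNDOCUMENTED` is a design choice: it tells the model (and a human reviewer) where the gaps are instead of letting it guess.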

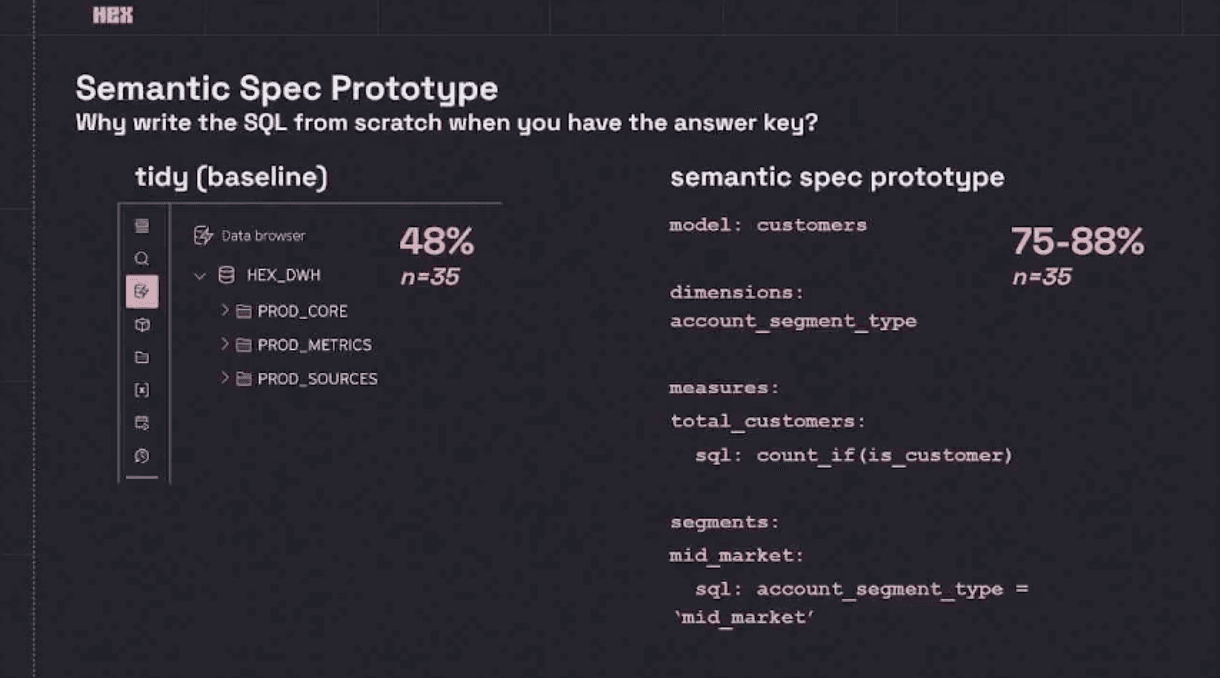

Semantic Layer

Defining a semantic layer for your LLM (and your team) is another step toward improving accuracy. Precise definitions of calculations and segment names leave less room for the model to guess. The Hex team found that defining semantics increased LLM accuracy by around 35%.
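The core idea of a semantic layer is that each business term resolves to exactly one calculation. The toy sketch below is not the dbt Semantic Layer or its API; it simply maps hypothetical metric names to SQL expressions and refuses to compile anything undefined, so "revenue" means the same thing whether a human or an LLM asks for it.

```python
from typing import Optional

# Hypothetical metric definitions: each business term resolves to exactly one SQL expression.
METRICS = {
    "revenue": "SUM(CASE WHEN status = 'completed' THEN amount ELSE 0 END)",
    "order_count": "COUNT(DISTINCT order_id)",
}


def compile_metric_query(metric: str, table: str, group_by: Optional[str] = None) -> str:
    """Build SQL for a defined metric; undefined metrics are rejected rather than guessed."""
    if metric not in METRICS:
        raise KeyError(f"unknown metric {metric!r}; define it in the semantic layer first")
    select = f"{METRICS[metric]} AS {metric}"
    if group_by:
        return f"SELECT {group_by}, {select} FROM {table} GROUP BY {group_by}"
    return f"SELECT {select} FROM {table}"


if __name__ == "__main__":
    print(compile_metric_query("order_count", "fct_orders", group_by="region"))
    # SELECT region, COUNT(DISTINCT order_id) AS order_count FROM fct_orders GROUP BY region
```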

As you can see from the tips above, the steps you take to help your LLM will also provide clarity for your current and future team members. Who can argue against helping our human and AI friends?

Accelerate Analytics Workflows with dbt Copilot

Speaking of AI, dbt announced a new feature at Coalesce: dbt Copilot. If you’re familiar with GitHub Copilot, you probably have an idea of what this tool does. Simply put, dbt Copilot helps you generate code in dbt through an LLM, whether you’re developing, testing, or analyzing.

Develop

The most predictable way dbt Copilot helps is by generating code from natural language prompts. This makes dbt more accessible to users with less technical experience, allowing them to self-serve data.

Interestingly, the documentation feature of dbt Copilot is equally impressive. With a single click, dbt will generate a semantic model and documentation for your data. While this auto-generated documentation might not include all your specific business logic, it will get you 70–80% of the way there, saving you time.

Test

Ask any data professional and they’ll tell you that testing is crucial, but dbt’s statistics show that only 50% of users test their data models. As a former teacher, I understand the resistance to writing tests, but we can do better! Luckily, Copilot can generate data tests with a single click. In the future, it will even support unit test generation.
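The data tests Copilot generates are dbt’s own YAML-configured tests, which dbt compiles to SQL that selects failing rows. Purely to show what two of the built-in generic tests, `not_null` and `unique`, check for, here is a Python sketch over in-memory rows; the function names are hypothetical and this is not how dbt executes tests.

```python
from collections import Counter


def not_null_failures(rows, column):
    """Rows where `column` is missing or None: the intent of dbt's built-in not_null test."""
    return [row for row in rows if row.get(column) is None]


def unique_failures(rows, column):
    """Non-null values of `column` that appear more than once: the intent of dbt's unique test."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return [value for value, count in counts.items() if count > 1]


if __name__ == "__main__":
    orders = [{"order_id": 1}, {"order_id": 2}, {"order_id": 2}, {"order_id": None}]
    print(not_null_failures(orders, "order_id"))  # [{'order_id': None}]
    print(unique_failures(orders, "order_id"))    # [2]
```

As in dbt, a test "passes" when it finds no failing rows, which is why both functions return the offenders rather than a boolean.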

Analyze

dbt Copilot also allows stakeholders to ask data questions in natural language and get answers directly. This capability is powered by the dbt Semantic Layer, which Copilot helps you create.

Future Plans

dbt Copilot is still under active development. In the near future, the Copilot team has two objectives: allowing users to bring LLMs other than OpenAI’s models, and allowing users to provide a style guide that Copilot will follow.

Don't panic: What to do when your data breaks

Have you ever woken up to phone calls, text messages, and Slack notifications from co-workers and your boss, all about something going wrong? I may still have some PTSD from a few of those situations…

Matilda Hultgren from Incident.io presented a plan to help prevent that panic from setting in. In short, we should handle data problems the same way software engineers handle theirs.

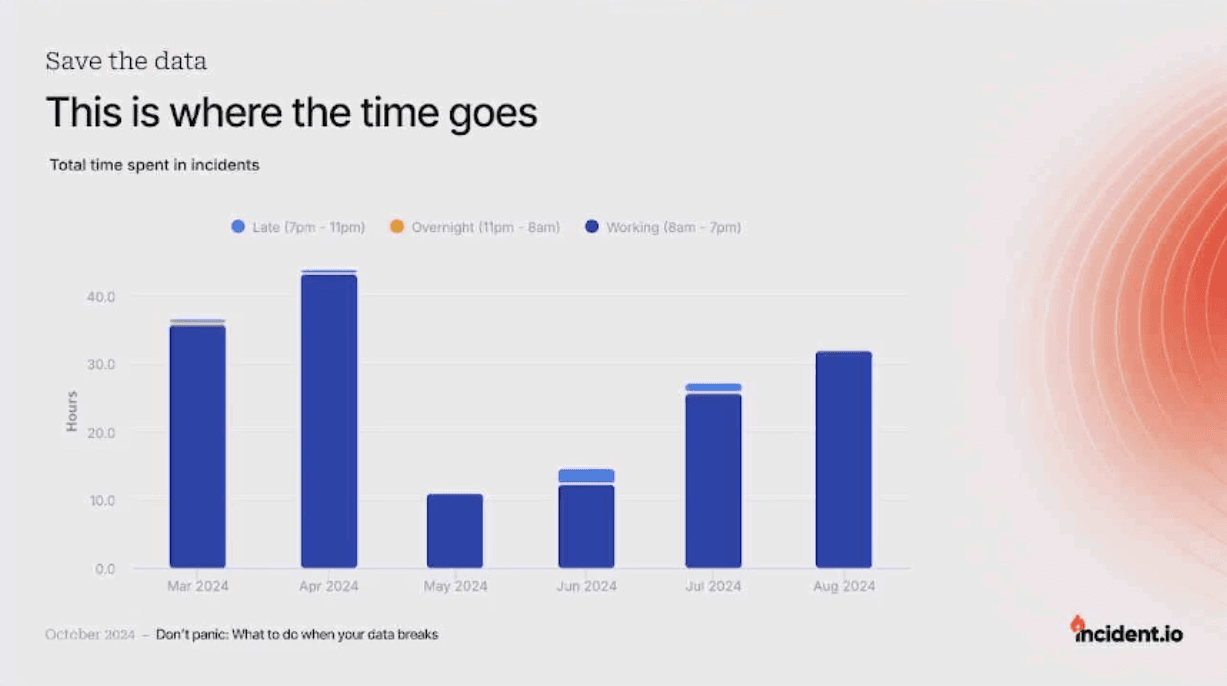

Treat Everything Like an Incident

Her first step is to treat every data issue like an incident, whether it’s a dashboard displaying bad data or a major pipeline breaking. If everything is treated as an incident, your team will have a process in place to begin fixing the problem, and the entire organization will have transparency on the issue. This approach also allows you to gather data on how much time and effort is being spent on incidents overall.

Establish a Responder Rota

Matilda suggested a new position for data teams: the Data Responder. Depending on the size of your organization, this could be a rotating role with someone “on-call,” or in larger organizations, a dedicated person focused solely on triaging issues as they arise.

The data responder’s responsibility is to assess the situation, determine the severity of the problem, and escalate it to the appropriate team. Their job isn’t to fix the issue themselves but to stop the initial panic and establish guidelines for who should handle the fix and what priority should be assigned.
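The responder’s job as Matilda described it (assess, set a severity, route to an owner) can be sketched as a small function. The severity rules, areas, and team names below are hypothetical examples for illustration, not incident.io’s product behavior or a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    summary: str
    area: str  # hypothetical areas, e.g. "ingestion" or "dashboards"
    pipeline_down: bool = False
    affects_customers: bool = False


# Hypothetical ownership map: which team fixes problems in each area.
OWNERS = {"ingestion": "data-platform", "dashboards": "analytics"}


def triage(incident: Incident) -> tuple[str, str]:
    """Assign a severity and an owning team; the responder routes the fix instead of doing it."""
    if incident.pipeline_down and incident.affects_customers:
        severity = "SEV1"
    elif incident.pipeline_down or incident.affects_customers:
        severity = "SEV2"
    else:
        severity = "SEV3"
    # Unknown areas fall back to a general queue rather than going unowned.
    return severity, OWNERS.get(incident.area, "data-team")


if __name__ == "__main__":
    print(triage(Incident("Revenue dashboard shows zeros", area="dashboards")))  # ('SEV3', 'analytics')
```

Writing the rules down, even this crudely, is what keeps the first few minutes of an incident calm: the responder applies them instead of debating them.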

Create a Dedicated Space

Once the responder identifies the issue, the next step is organizing the communication. Matilda recommends creating a dedicated Slack channel for each incident, regardless of its size. These channels can even be automatically generated through API calls to make the process smoother.
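If channels are created automatically, they need predictable names. The sketch below builds one from an incident ID, date, and summary; the naming scheme is a hypothetical example. As I understand Slack’s rules, channel names are limited to lowercase letters, digits, hyphens, and underscores, at most 80 characters. A name like this could then be passed as the `name` argument to Slack’s `conversations.create` Web API method; the API call itself, with its token and error handling, is left out.

```python
import re
from datetime import date


def incident_channel_name(incident_id: int, summary: str, opened: date) -> str:
    """Build a Slack-friendly channel name such as 'inc-42-2024-10-08-revenue-dashboard-shows-zeros'."""
    # Collapse anything that isn't a lowercase letter or digit into single hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", summary.lower()).strip("-")
    name = f"inc-{incident_id}-{opened.isoformat()}-{slug}"
    # Enforce the 80-character limit and avoid a dangling hyphen after truncation.
    return name[:80].rstrip("-")


if __name__ == "__main__":
    print(incident_channel_name(42, "Revenue dashboard shows ZEROS!", date(2024, 10, 8)))
    # inc-42-2024-10-08-revenue-dashboard-shows-zeros
```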

In this space, the data responder can coordinate conversations, ensure all details are outlined, and help keep communication focused. This prevents cluttered discussions and ensures incidents are addressed, not forgotten.

An orchestration tool like Prefect can help keep your incidents in one shared place, making them easy to track and manage as they happen.

Save the Data

We’ve all had that conversation with our boss: “I couldn’t finish my work this week because bug fixes took priority.” With the data gathered from this new incident process, you can back up those claims by outlining exactly which incidents took up your time. It’s a great way to “put your money where your mouth is.”
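Once incidents are logged consistently, turning them into that conversation with your boss is a small aggregation. This sketch assumes hypothetical incident records with `category`, `opened`, and `resolved` fields, and uses open-to-resolved wall-clock time as a rough proxy for effort; a real incident tool would track this in its own way.

```python
from collections import defaultdict
from datetime import datetime


def hours_by_category(incidents):
    """Total open-to-resolved hours per incident category."""
    totals = defaultdict(float)
    for incident in incidents:
        duration = incident["resolved"] - incident["opened"]
        totals[incident["category"]] += duration.total_seconds() / 3600
    return dict(totals)


if __name__ == "__main__":
    incidents = [
        {"category": "broken dashboard", "opened": datetime(2024, 10, 7, 9, 0), "resolved": datetime(2024, 10, 7, 11, 30)},
        {"category": "pipeline failure", "opened": datetime(2024, 10, 8, 6, 0), "resolved": datetime(2024, 10, 8, 14, 0)},
        {"category": "broken dashboard", "opened": datetime(2024, 10, 9, 13, 0), "resolved": datetime(2024, 10, 9, 14, 0)},
    ]
    print(hours_by_category(incidents))  # {'broken dashboard': 3.5, 'pipeline failure': 8.0}
```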

As you can see, dbt Coalesce 2024 offered an incredible range of insights, from the evolving role of open-source tools to the growing impact of AI on data workflows. Whether it’s prioritizing features with community input or improving the accuracy of LLMs, the future of data engineering is filled with exciting possibilities. And don’t forget, the conversation doesn’t end here: Prefect’s upcoming event with dbt will dive even deeper into these trends. I hope to see you there!