Introducing Prefect Workers and Projects

Workflows exist in the nebulous ether between a “script” and an “application.” In fact, workflows often start out as something more like a script and become something more like an application over time. Prefect embraces this, enabling you to run something as simple as a single function, or as complex as massively parallel workflows with thousands of tasks. Prefect can support this wide range of uses because, like Python itself, it is flexible. This flexibility presents a challenge: the tools and processes that a modest script demands are different from those that a full-blown application demands.

Prefect 2 introduced the concept of a deployment, which encapsulates everything Prefect knows about an instance of a flow, but getting flow code to run anywhere other than where it was written is tricky — a lot of things need to be in the right place, with the right configuration, at the right time. Deployments often have critical, implicit dependencies on build artifacts, such as containers, that are created and stored outside of Prefect. Each of these dependencies presents a potential stumbling block when deploying a flow for remote execution — you must satisfy them for your flow to run successfully.

Today, Prefect is introducing workers and projects in beta to address this challenge.

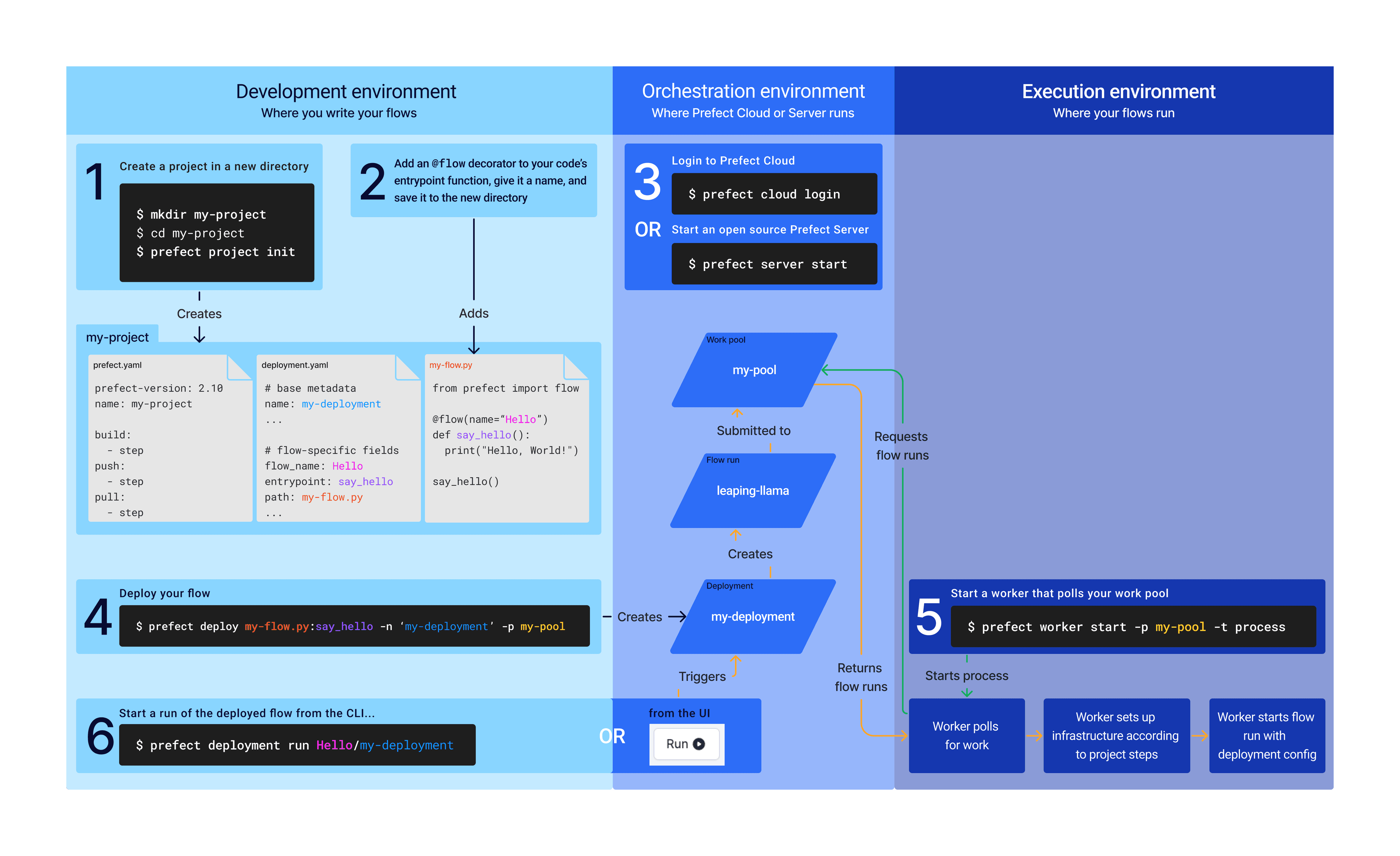

Workers are services that run in your desired execution environment, where your flow code will run. If you’re already familiar with Prefect, you can think of workers as next-generation agents, designed from the ground up to interact with work pools. Each worker manages flow run infrastructure of a specific type and must pull from a work pool with a matching type. Projects are a contract between you and a worker, specifying what you do when you create a deployment, and what the worker will do before it kicks off that deployment. Together, projects and workers bridge your development environment, where your flow code is written, and your execution environment, where your flow code runs.

Projects: the package and procedure

A project is a directory of files defining one or more flows, deployments, Python packages, or any other dependencies your flow code needs to run. If you’ve been using Prefect, or working on any non-trivial Python project, you probably have an organized structure like this already. Prefect projects are minimally opinionated, so they can work with the structure you already have in place and with the containerization, version control, and build automation tools that you know and love. The beauty of projects as directories is that you can make relative references between files while retaining portability. We expect most projects to map directly to a git repository. In fact, projects offer a first-class way to clone a git repository so they can be easily shared and synced.

Projects also include a lightweight build system that you can use to define the process for deploying flows in that project. That procedure is specified in a new prefect.yaml file, in which you can specify steps to build the necessary artifacts for a project's deployments, push those artifacts, and retrieve them at runtime. The prefect.yaml file defines a series of steps in three groups: build steps, which create the artifacts; push steps, which upload them; and pull steps, which retrieve them at runtime.
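As a rough mental model of how the build, push, and pull groups relate to each other, the sketch below runs build and push steps when a deployment is created and pull steps just before a flow run. It is a conceptual illustration only, not Prefect's implementation or API: every name in it (`PROJECT_STEPS`, `deploy`, `prepare_flow_run`, the image name, and the working directory) is hypothetical.

```python
# Conceptual model only: NOT Prefect's implementation or its API.
# All names, paths, and image tags here are hypothetical.
from typing import Callable, Dict, List

Step = Callable[[Dict[str, str]], None]


def build_image(ctx: Dict[str, str]) -> None:
    # A build step produces an artifact, e.g. a container image.
    ctx["image"] = "example/flows:latest"


def push_image(ctx: Dict[str, str]) -> None:
    # A push step uploads the artifact that the build step produced.
    ctx["pushed"] = ctx["image"]


def clone_repo(ctx: Dict[str, str]) -> None:
    # A pull step retrieves the flow code in the execution environment.
    ctx["workdir"] = "/tmp/project"


# The three groups of steps, in the order they are described above.
PROJECT_STEPS: Dict[str, List[Step]] = {
    "build": [build_image],
    "push": [push_image],
    "pull": [clone_repo],
}


def run_group(group: str, ctx: Dict[str, str]) -> Dict[str, str]:
    """Run every step of one group, in order, against a shared context."""
    for step in PROJECT_STEPS[group]:
        step(ctx)
    return ctx


def deploy() -> Dict[str, str]:
    """What you do when creating a deployment: build, then push."""
    ctx: Dict[str, str] = {}
    run_group("build", ctx)
    run_group("push", ctx)
    return ctx


def prepare_flow_run(deploy_ctx: Dict[str, str]) -> Dict[str, str]:
    """What a worker does before a flow run: execute the pull steps."""
    return run_group("pull", dict(deploy_ctx))
```

Calling `deploy()` and then `prepare_flow_run(...)` on its result mirrors the split described above: the first two groups run once in the development environment, while the pull group runs in the execution environment for each flow run.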

Recipes

Because projects are directory- and process-aware, they enable exciting new ways for Prefect to help you get started. Prefect now ships with recipes: project templates for common tools and their corresponding deployment patterns. If you are using Git to version control your code and Docker to containerize it, for instance, you can set up a fresh directory with the docker-git recipe, which offers the best-practice project directory structure and steps for working with Git and Docker. Soon, you will even be able to create and share your own recipes.

Workers and work pools: pulling and preparing

A project’s steps are attached to every deployment created with that project. That deployment also specifies a work pool — an interface to an execution environment where the flow will run. Work pools can be assigned a specific infrastructure type — Kubernetes, Docker, etc. They expose rich configuration of their infrastructure. Every work pool type has a base configuration with sensible defaults such that you can begin executing work with just a single command.

With work pools, infrastructure configuration is fully customizable from the Prefect UI. For example, you can now customize the YAML payload used to run flows on Kubernetes; you are not limited to the fields exposed in the Prefect SDK. Prefect provides templating to inject runtime information and common settings into infrastructure creation payloads. Advanced users can add custom template variables, which are then exposed in the same easy-to-use UI as Prefect's default options.
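To make the templating idea concrete, here is a minimal sketch of filling placeholders in an infrastructure payload with runtime values. It assumes a `{{ name }}` placeholder syntax for illustration; the `render` helper, the payload shape, and the variable names are hypothetical and do not reproduce Prefect's own template format or code.

```python
# Illustrative sketch only: the payload shape, the `{{ name }}` syntax,
# and the `render` helper are assumptions, not Prefect's actual API.
import re
from typing import Any, Dict

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: Any, variables: Dict[str, Any]) -> Any:
    """Return a copy of `template` with placeholders replaced by values."""
    if isinstance(template, dict):
        return {key: render(value, variables) for key, value in template.items()}
    if isinstance(template, list):
        return [render(value, variables) for value in template]
    if isinstance(template, str):
        whole = PLACEHOLDER.fullmatch(template)
        if whole:
            # A string that is exactly one placeholder keeps the value's type.
            return variables[whole.group(1)]
        # Otherwise substitute each placeholder inside the larger string.
        return PLACEHOLDER.sub(lambda m: str(variables[m.group(1)]), template)
    return template


# A hypothetical job payload with default and custom template variables.
job_template = {
    "metadata": {
        "name": "flow-run-{{ flow_run_name }}",
        "namespace": "{{ namespace }}",
    },
    "container": {"image": "{{ image }}", "cpu_limit": "{{ cpu_limit }}"},
}

rendered = render(
    job_template,
    {
        "flow_run_name": "abc",
        "namespace": "prod",
        "image": "example/flows:latest",
        "cpu_limit": 2,
    },
)
```

In this sketch a missing variable raises a `KeyError` rather than being silently left in the payload, which is one reasonable design choice when the rendered result is about to be sent to real infrastructure.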

Work pools can have many workers — background services that regularly poll their corresponding work pool for flow runs to be executed. Just before kicking off each flow run, workers set up the infrastructure that the deployed flow needs and execute the project pull steps. If the work pool’s default configuration is updated, all workers automatically begin using the new settings. You no longer need to redeploy your agents to change infrastructure settings. For advanced use cases, you can override the base config on a per-deployment basis.
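The behavior described above can be sketched as a small model: a worker only polls a pool of its own type, reads the pool's base configuration at poll time, and layers any per-deployment overrides on top. This is a simplified illustration under stated assumptions, not Prefect's worker implementation; the `WorkPool` and `Worker` classes, their fields, and the `overrides` key are all hypothetical.

```python
# Simplified model only: these classes and fields are hypothetical and
# are not Prefect's worker or work pool implementation.
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class WorkPool:
    name: str
    type: str
    base_config: Dict[str, Any]
    # Scheduled flow runs waiting to be picked up by a worker.
    queue: List[Dict[str, Any]] = field(default_factory=list)


@dataclass
class Worker:
    type: str

    def poll_once(self, pool: WorkPool) -> List[Dict[str, Any]]:
        """Take all pending runs from the pool and resolve their config."""
        if pool.type != self.type:
            raise ValueError(
                f"{self.type!r} worker cannot poll {pool.type!r} pool {pool.name!r}"
            )
        started = []
        while pool.queue:
            run = pool.queue.pop(0)
            # The base config is read at poll time, so edits to the pool
            # take effect without restarting the worker. Per-deployment
            # overrides win over the pool defaults.
            config = {**pool.base_config, **run.get("overrides", {})}
            started.append({"flow_run": run["name"], "config": config})
        return started


pool = WorkPool(
    name="k8s-pool",
    type="kubernetes",
    base_config={"image": "example/flows:v1", "namespace": "default"},
)
worker = Worker(type="kubernetes")

pool.queue.append({"name": "run-1"})
pool.queue.append({"name": "run-2", "overrides": {"namespace": "staging"}})
first_batch = worker.poll_once(pool)

# Updating the pool's defaults affects the next poll of the same worker.
pool.base_config["image"] = "example/flows:v2"
pool.queue.append({"name": "run-3"})
second_batch = worker.poll_once(pool)
```

Here `run-2` picks up the `staging` namespace from its override while keeping the pool's image, and `run-3` sees the updated image even though the worker object was never recreated.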

Putting it all together

You can think of Prefect Cloud, or your self-hosted Prefect Server, as running in an orchestration environment sitting in between your development environment and your execution environment. Your development process starts with a flow, usually a single .py file on your laptop. With a project, you can deploy that flow, and the information it needs to run, to a work pool (a representation of your execution environment), where it will be picked up by a worker. With projects and workers, you can go from a blank slate to a deployment running in a remote environment with just a few commands.

Try it out for yourself! Create your first Prefect project by following this tutorial.