Monitoring Serverless Functions

If a tree fell in the forest and no one was there to see it, did it really fall?

Yes, especially if it blocked a trail that hikers came across just days later. It would’ve been much better if the fallen tree had been removed from the trail before it created an impasse.

As data or platform engineers, we’ve all sent or received a Slack message like this one.

The tree, just like those serverless functions, wasn’t observed. Yet it still created an issue down the road. If you expect your serverless functions to fire and they don’t, you’re going to find out eventually. And when you dig in, you may find a large backlog of unprocessed data that is lost, stale, or simply unused.

Unused data is a problem when there are downstream dependencies. Consider a serverless function that takes new user signups and sends each new user a welcome email. Or, more critically, a function that processes new transactions to alert the risk team to potential fraud.

Unmonitored cloud functions can have detrimental consequences.

Lambda and cloud function observability: the key elements

The TL;DR: just as you have tests for your code, you should have tests for your infrastructure. Any piece of infrastructure comes with expectations: uptime, availability, latency, freshness, and so on. Serverless functions on GCP (Cloud Functions), AWS (Lambda), and Azure (Azure Functions) are no exception. If you’re curious about the details, read more about deploying serverless Python functions here.

Ensure your functions meet your expectations by:

- Knowing how often a function runs. This piece of information will allow you to act if a function has not run in an alarming amount of time (like 3 days).

- Alerting when a function fails. Errors happen. Some are critical, some aren’t. Surface logs and errors in a communication channel that is easily accessible to a broader team, which usually means not just keeping logs in CloudWatch.

- Triggering functions from a central platform. This is not always possible with serverless functions as-is. If you route function invocations through a central platform, you gain visibility not only into whether your expectations are met but also into how your functions interact with the rest of your system, and you can manage the dependencies between them.
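The first expectation, knowing how often a function runs, comes down to comparing the last run time against a maximum allowed gap. Here is a minimal sketch in Python. The helper `is_overdue`, and the assumption that you already record a last-run timestamp somewhere, are hypothetical and not part of any Prefect or cloud-provider API.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def is_overdue(last_run: datetime, max_gap: timedelta, now: Optional[datetime] = None) -> bool:
    """Return True if the function has not run within `max_gap` (hypothetical helper)."""
    # Default to the current UTC time so both datetimes are timezone-aware.
    now = now or datetime.now(timezone.utc)
    return now - last_run > max_gap


# Example: alert if a function has not run in 3 days.
last_run = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
check_time = datetime(2024, 1, 5, 9, 0, tzinfo=timezone.utc)
print(is_overdue(last_run, timedelta(days=3), now=check_time))  # True
```

Doing this by hand means storing and polling timestamps yourself; the setup that follows lets Prefect Cloud handle that bookkeeping with an automation instead.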

Process events through Prefect Cloud: the setup

A function invocation can be represented as an event. Understanding what is happening with your infrastructure at any given point in time and acting on it, which you can call operational intelligence, is imperative for full-stack and data platform teams alike.

One way to have operational intelligence is to route events through a platform that will help you observe them, know about the important ones, and largely become—well, intelligent—about your infrastructure operations. Prefect Cloud can do many things, but let’s focus on these three: orchestrate your workflows, process events, and observe all that behavior.

Thinking about your serverless functions as a piece of a robust workflow application allows you to benefit from centralized dashboards, APIs, and event streams. Unlike traditional workflow orchestration systems, Prefect has an event-first architecture that works well with event processing from serverless functions.

Applying this application view to Lambda or Cloud Functions, we will first set up a place to ingest events into Prefect Cloud, then run an action if an alarm occurs.

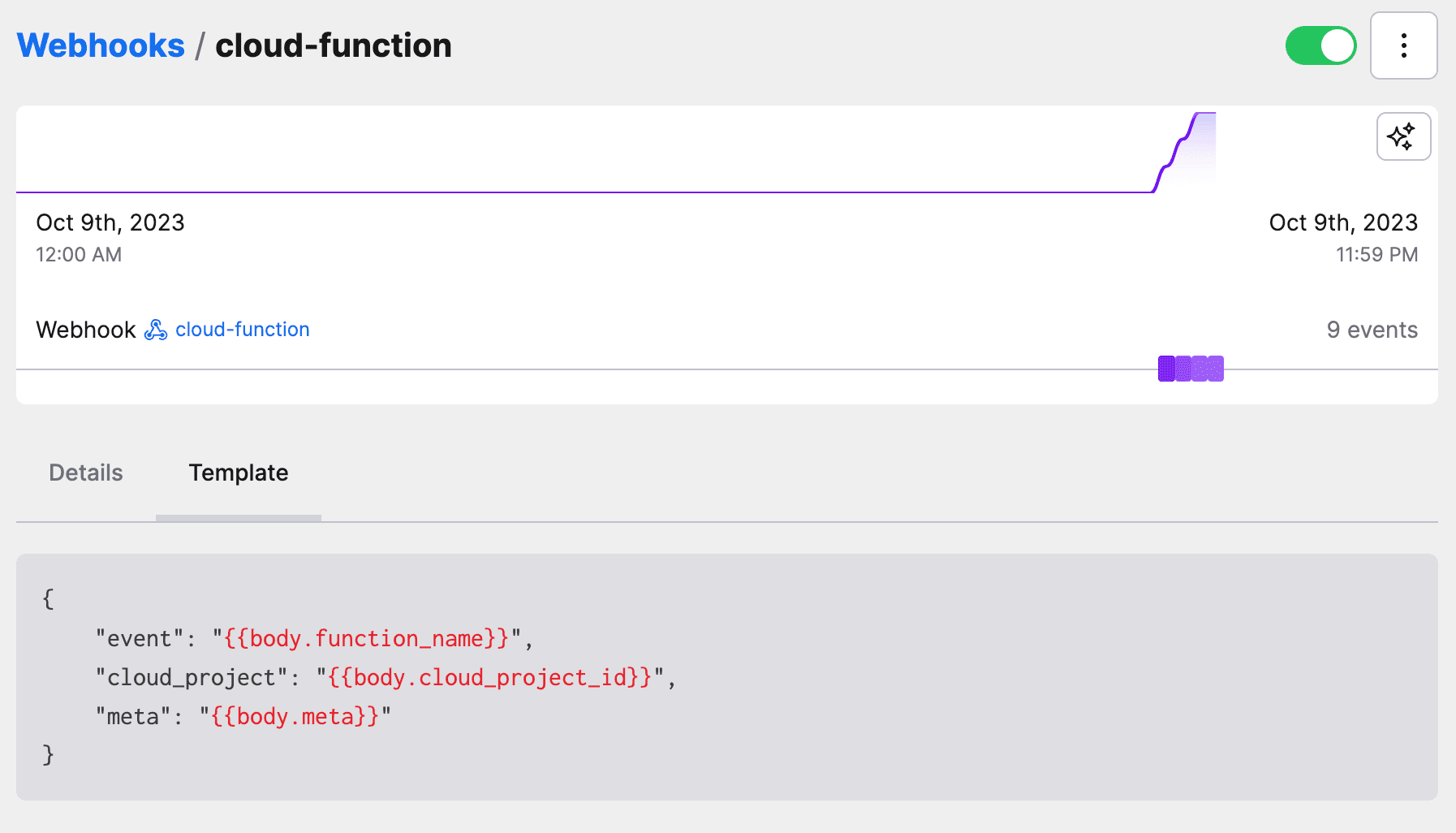

1. Create a webhook in Prefect Cloud. This webhook will be ready to receive events from anywhere. Click “event webhooks” in the menu, then create a new one by clicking the “+” at the top. We are setting a custom trigger that uses the request body to denote a function name, a cloud project, and whatever we want to include in the logs as a “meta” field.

Save the webhook resource ID (UUID in the URL) as you will need it for later steps.

The webhook is now available to call from anywhere, even with a simple `curl https://api.prefect.cloud/hooks/YOUR_WEBHOOK_URL`, which will create a webhook event in Prefect Cloud.

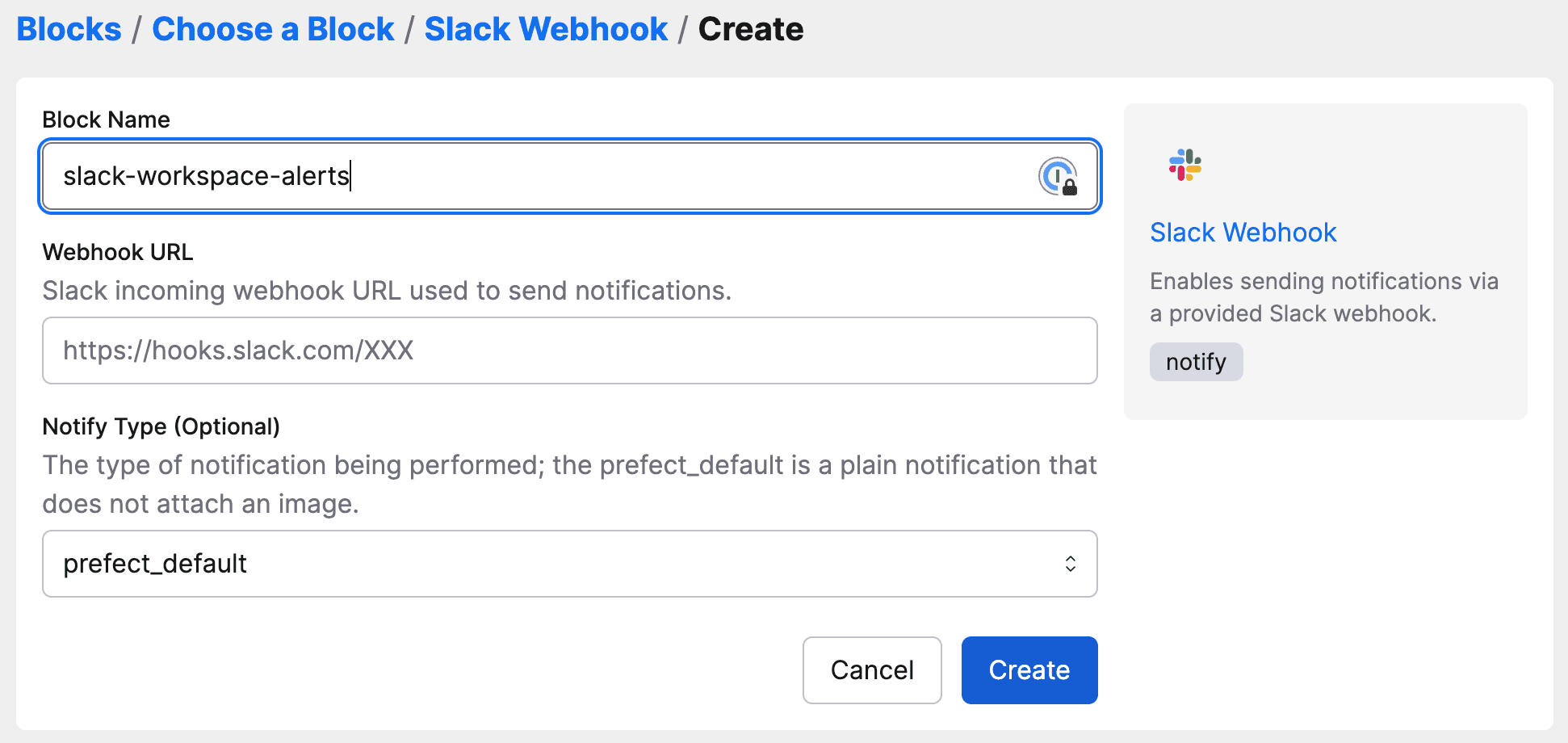

2. Create a Slack connection in your Prefect Cloud workspace. Click the “Blocks” menu item, create a new block by clicking the “+”, and select the Slack Webhook type. The webhook URL comes from your Slack app.

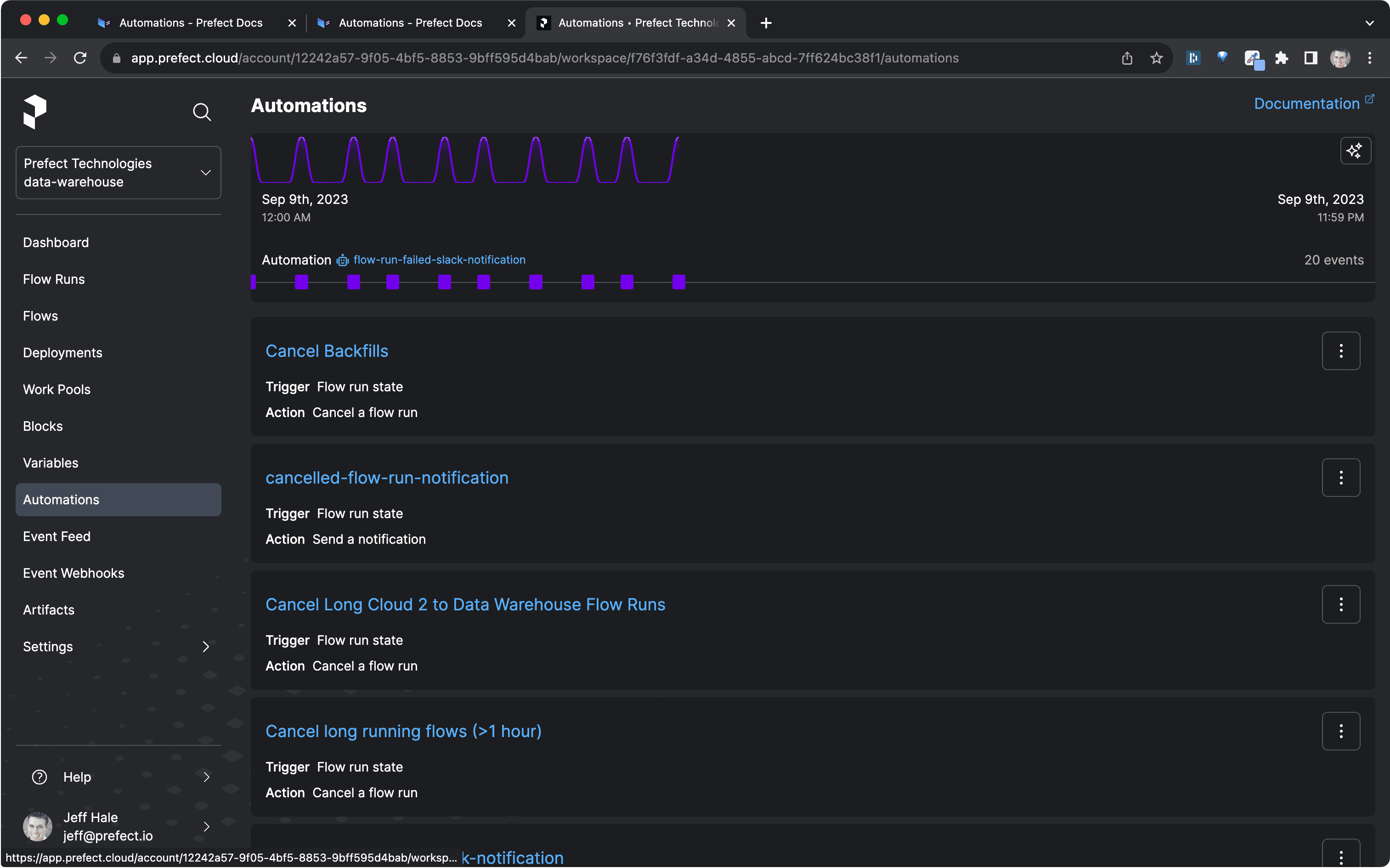

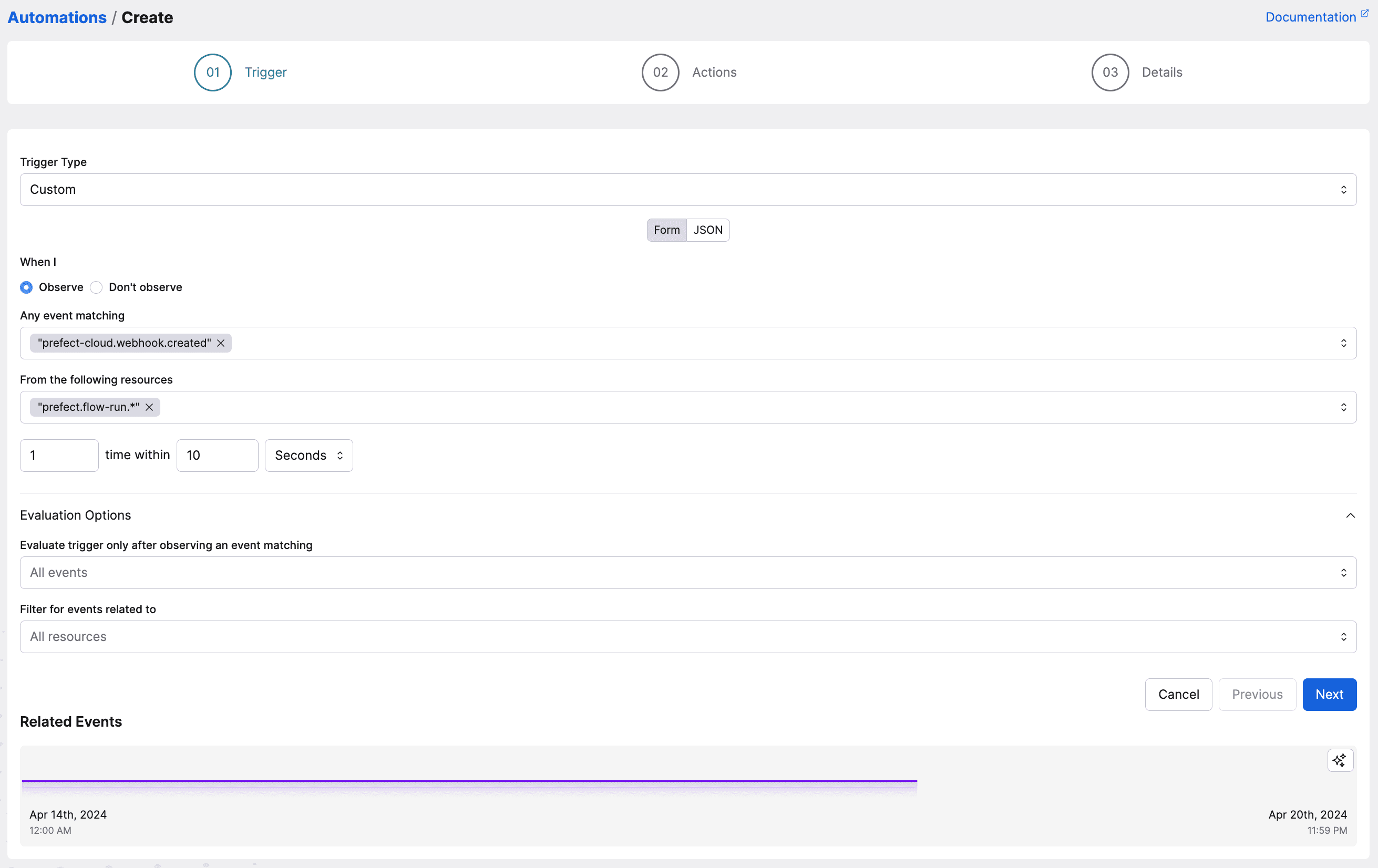

3. Create an automation in Prefect Cloud. The automation takes a trigger (the webhook you created in step 1) and submits an action. An automation can trigger virtually any behavior in Prefect Cloud, from running a Python script to launching infrastructure to simply sending a Slack notification. We will implement the last of these here.

Click the “automations” menu item and the “+” to create a new one. The trigger type will be custom, since we are relying on webhook events. A full spec can be found here. Below, we create an automation that fires if one hello_http event from the webhook has not occurred within 10 seconds.

- prefect.resource.id: This is the webhook we created above. You can find its ID in the webhook’s URL.

- Expect: This is the event name we will be passing into the webhook (we’ve set this to be the function name).

- Posture: We set it to proactive, which means the automation fires when something we expect does not happen.

- Threshold: The number of events we expect to occur.

- Within: The time window, in seconds, in which we expect the threshold number of events. With a proactive posture, the automation fires if those events do not occur within that many seconds.

- Then, select the Slack block you created in step 2 for the action on the next screen.
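Written out, the trigger fields above might look like the following, built here as a Python dict and serialized to JSON. This is a sketch: the `match` wrapper and the `prefect-cloud.webhook.` resource-ID prefix reflect our understanding of Prefect’s custom trigger format and should be checked against the full spec, and `WEBHOOK_ID` is a placeholder for the UUID you saved in step 1.

```python
import json

# Placeholder: the webhook UUID saved in step 1.
WEBHOOK_ID = "YOUR_WEBHOOK_ID"

proactive_trigger = {
    # Assumed resource ID format for events produced by a Prefect Cloud webhook.
    "match": {"prefect.resource.id": f"prefect-cloud.webhook.{WEBHOOK_ID}"},
    # The event name the webhook emits (set to the function name).
    "expect": ["hello_http"],
    # Proactive: fire when the expected events do NOT arrive.
    "posture": "Proactive",
    # Fire if fewer than 1 matching event arrives...
    "threshold": 1,
    # ...within a 10-second window.
    "within": 10,
}

# Paste the printed JSON into the custom trigger editor.
print(json.dumps(proactive_trigger, indent=2))
```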

Now we’re all set up to use Prefect Cloud. Let’s send data to the webhook and see what happens. But we want the setup to be interesting and realistic, right? Right.

Take action when a GCP Cloud Function has not run

Monitoring has to handle not only events in GCP but also the absence of events. You also have to integrate existing systems like Slack, email, and other alerting tools to notify downstream stakeholders. So, we will send data directly to Prefect Cloud. The webhook we created above is like any other webhook endpoint: it can receive data from anywhere.

1. Invoke your webhook in Python. The Python code you need to invoke your webhook is below:

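```python
import json

import requests

webhook_url = "https://api.prefect.cloud/hooks/YOUR_WEBHOOK_URL"

# request_json is the parsed body of the incoming request; in the default
# HTTP function template it comes from request.get_json(silent=True).
webhook_data = {
    "function_name": "hello_http",
    "cloud_project": "gcp_dev",
    "meta": request_json,
}

# Send the event to the Prefect Cloud webhook.
req = requests.post(
    webhook_url,
    data=json.dumps(webhook_data),
    headers={"Content-type": "application/json"},
)
```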

2. Add the code to your function. The default code for a Python function is populated when you create a new function; all you need to add is the code above.

Note: the *requests* package isn’t in the Python standard library, so you have to add it to the *requirements.txt* file. I personally lean on this package for its readability, but you can also use *urllib*, as illustrated below.
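If you would rather skip *requirements.txt* entirely, the same POST can be sent with the standard-library `urllib.request` module. This is a minimal sketch; `build_webhook_request` and `send_to_prefect` are hypothetical helper names, and the URL is the same placeholder as in the snippet above.

```python
import json
import urllib.request

webhook_url = "https://api.prefect.cloud/hooks/YOUR_WEBHOOK_URL"


def build_webhook_request(url: str, payload: dict) -> urllib.request.Request:
    """Build the same JSON POST the requests-based snippet sends."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_to_prefect(payload: dict) -> int:
    """Send the payload to the Prefect webhook and return the HTTP status code."""
    req = build_webhook_request(webhook_url, payload)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

Inside the Cloud Function you would call something like `send_to_prefect({"function_name": "hello_http", "cloud_project": "gcp_dev", "meta": request_json})`. Splitting request construction from sending keeps the payload logic easy to test without network access.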

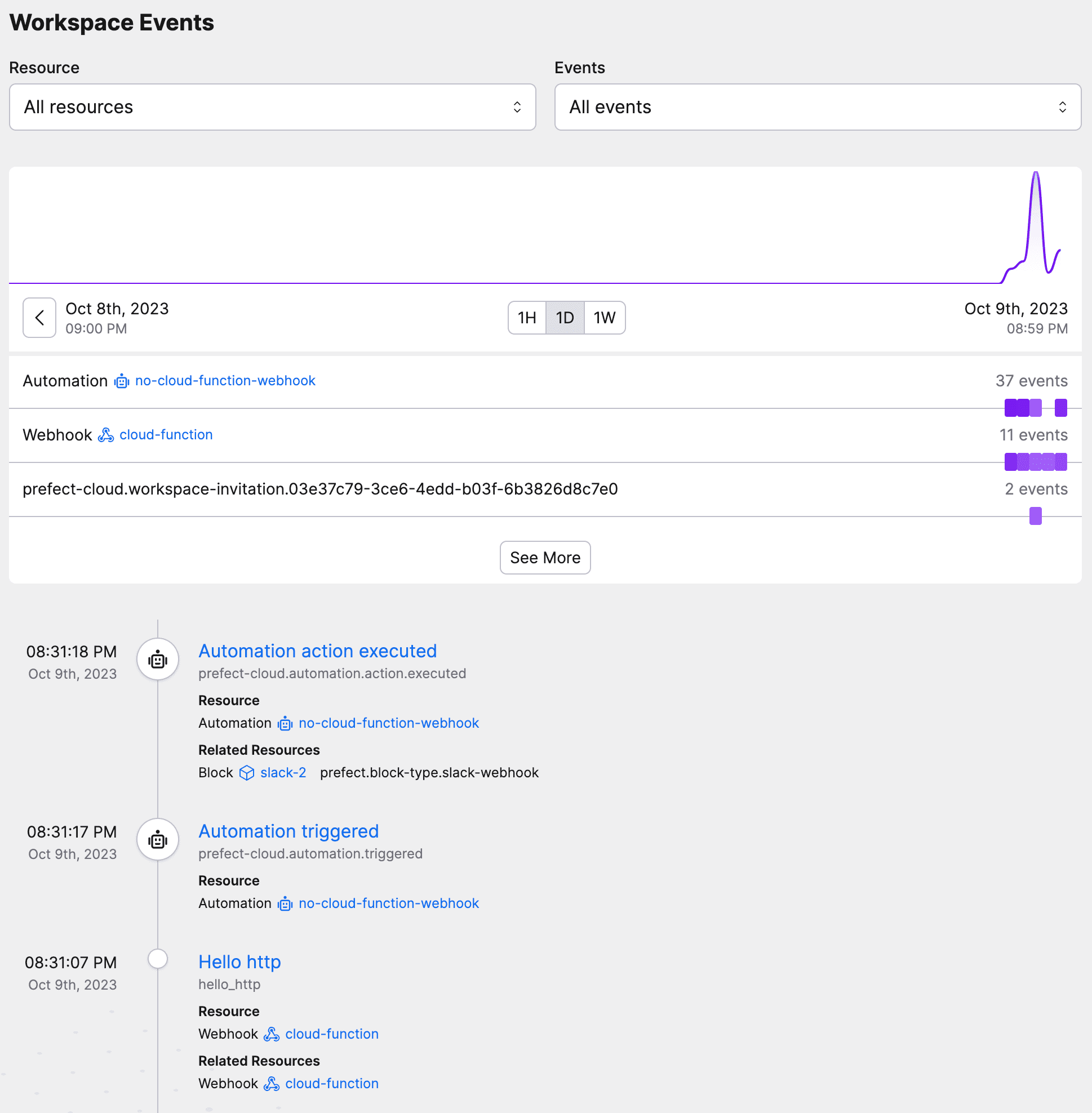

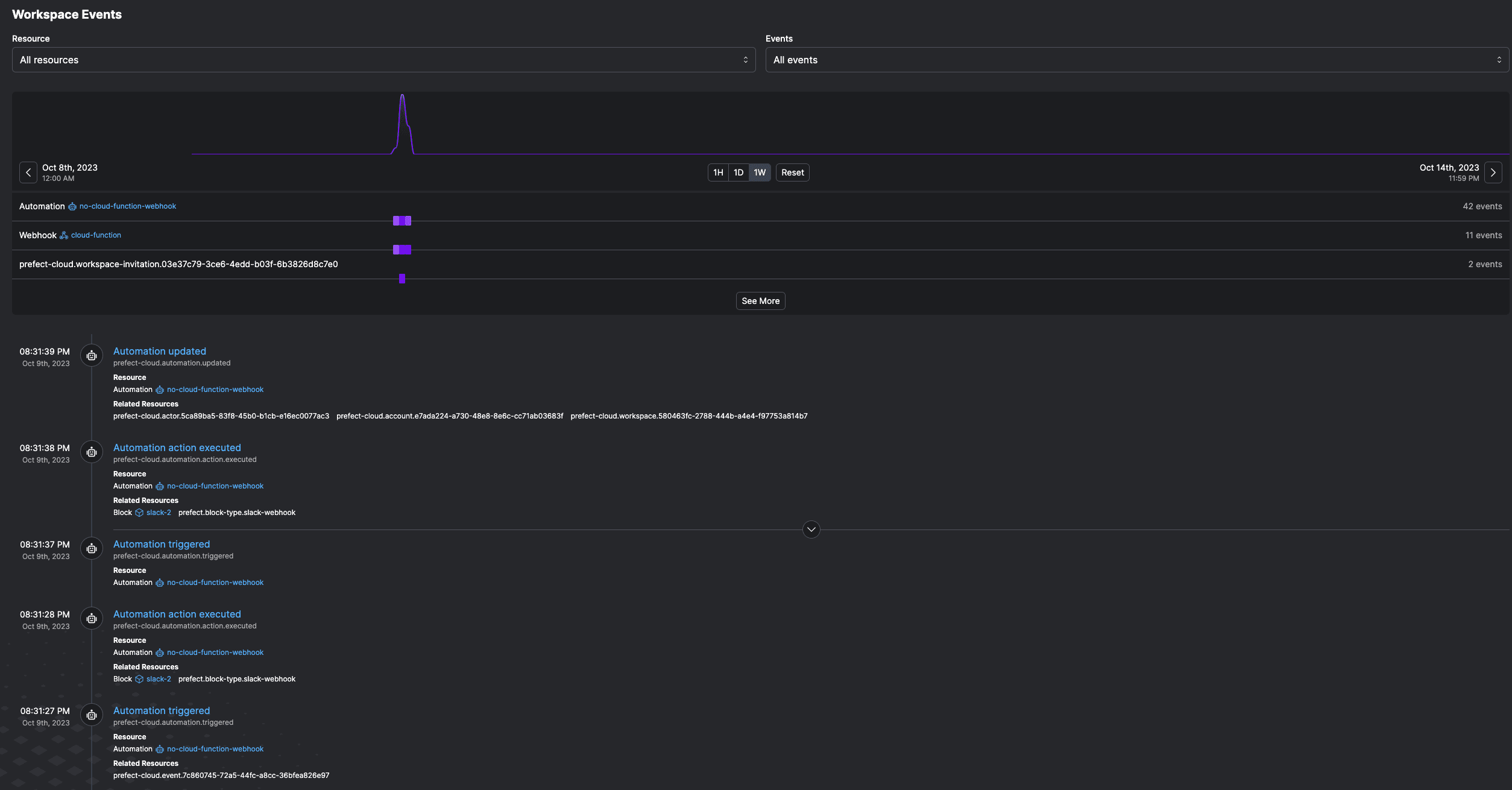

Voila! When we test the Cloud Function, it sends the hello_http event to Prefect, and you can see it in the event feed in Prefect Cloud. After 10 seconds, the automation we created is triggered. Recall that the automation fires when the function has not run within 10 seconds.

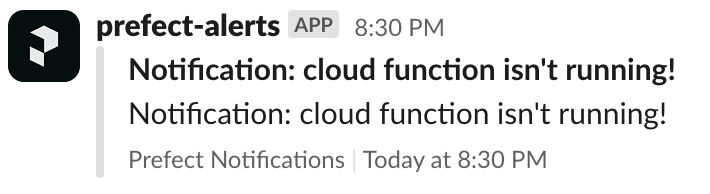

The automation executing means we get a Slack notification!
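Why does a single successful test still end in an alert? With a proactive posture, each window is judged on whether enough events arrived inside it, so once the window after the test passes with no new hello_http event, the automation fires. The function below is a simplified mental model of that logic, not Prefect’s implementation; the fixed, back-to-back 10-second windows are an assumption made for clarity.

```python
from datetime import datetime, timedelta


def proactive_trigger_fires(event_times, window_start, within_seconds=10, threshold=1):
    """Simplified model: fire if fewer than `threshold` events land in the window."""
    window_end = window_start + timedelta(seconds=within_seconds)
    # Count only the events that arrived inside [window_start, window_end).
    count = sum(1 for t in event_times if window_start <= t < window_end)
    return count < threshold


test_run = datetime(2024, 1, 1, 12, 0, 0)

# The window covering the test invocation sees one event, so nothing fires.
print(proactive_trigger_fires([test_run], window_start=test_run))  # False
# The next 10-second window sees no events, so the automation fires.
print(proactive_trigger_fires([test_run], window_start=test_run + timedelta(seconds=10)))  # True
```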

You can now truly observe your GCP Cloud Functions. Automations can also be used to run Prefect flows—any Python function in a script can be turned into a flow simply with the @flow decorator.

For instance, instead of using webhooks, you can make any Python function within your Cloud Function observable with Prefect by adding a @flow decorator. Outside of your Cloud Function, you might keep a backup flow: a script that can trigger the function’s work, so you have time to triage without blocking downstream tasks. This flow can run independently, or it can have no schedule and rely solely on this automation to trigger it.

Alternatively, instead of waiting for something to not happen, we can take action when we get a specific type of event.

React to a CloudWatch log

Most AWS services send logs to CloudWatch automatically. However, Cloud Functions, AWS Lambda, and ECS all suffer from the same problem: a lack of observability, which undermines a platform team’s ability to understand its operations and infrastructure. This example uses AWS to illustrate the value of observability and of taking action.

Logs that stay in CloudWatch fall deeper and deeper into a black hole unless they are reacted to.

Using a Lambda function to react to logs in CloudWatch is a common pattern in AWS, where a Lambda function itself is deployed and triggered by CloudWatch logs from any type of infrastructure, not just other Lambda functions.

👉 Consider this: your ECS cluster is down. Your EC2 instance is constantly restarting. How do you know this and react automatically? All of these resources send logs to CloudWatch. Use that to your advantage.

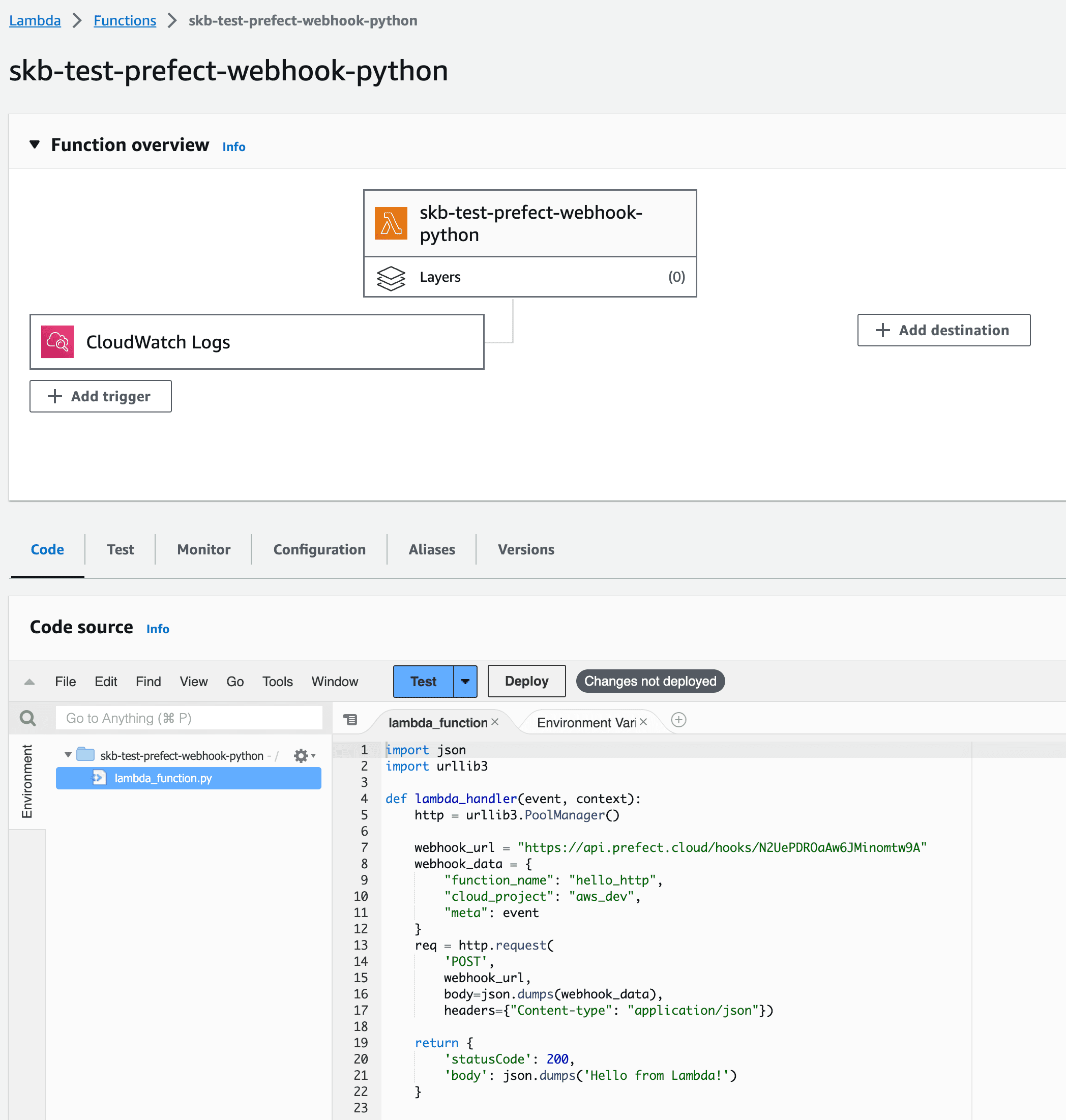

1. Create a Python-based Lambda function. We are using only pre-loaded Python packages, which is why we use urllib3 rather than the requests package used above. The function has the same logic as before: it posts a request to the Prefect webhook.

2. Add a CloudWatch trigger to the function. You can set any filter you want, such as only alarms or all logs. If you send all logs to Prefect Cloud, you can see them in your event feed and react to any of your CloudWatch logs at different levels of severity. Filtering for severity can happen in Lambda or in Prefect.
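The two steps above can be sketched as a single handler. CloudWatch Logs delivers subscription data to Lambda as base64-encoded, gzip-compressed JSON under `event["awslogs"]["data"]`, with `CONTROL_MESSAGE` deliveries used as connectivity checks. This sketch swaps urllib3 for the standard-library `urllib.request`, does the severity filtering in Lambda, and uses hypothetical choices throughout: the `SEVERITY_KEYWORDS` tuple, the `cloudwatch_log` event name, and the `aws_dev` project label.

```python
import base64
import gzip
import json
import urllib.request

# Placeholder: your Prefect Cloud webhook URL.
WEBHOOK_URL = "https://api.prefect.cloud/hooks/YOUR_WEBHOOK_URL"
# Hypothetical severity keywords; adjust to match your log format.
SEVERITY_KEYWORDS = ("ERROR", "CRITICAL")


def decode_cloudwatch_logs(event: dict) -> dict:
    """Decode the base64-encoded, gzip-compressed payload CloudWatch Logs sends."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def build_payloads(log_data: dict, keywords=SEVERITY_KEYWORDS) -> list:
    """Turn matching log events into webhook bodies shaped like the GCP example."""
    # CONTROL_MESSAGE deliveries are connectivity checks, not real log data.
    if log_data.get("messageType") != "DATA_MESSAGE":
        return []
    payloads = []
    for log_event in log_data["logEvents"]:
        message = log_event["message"]
        # An empty keyword tuple disables filtering and forwards everything.
        if keywords and not any(k in message for k in keywords):
            continue
        payloads.append({
            "function_name": "cloudwatch_log",  # hypothetical event name
            "cloud_project": "aws_dev",  # hypothetical project label
            "meta": {
                "log_group": log_data["logGroup"],
                "log_stream": log_data["logStream"],
                "timestamp": log_event["timestamp"],
                "message": message,
            },
        })
    return payloads


def lambda_handler(event, context):
    """Forward each matching CloudWatch log event to the Prefect webhook."""
    payloads = build_payloads(decode_cloudwatch_logs(event))
    for payload in payloads:
        req = urllib.request.Request(
            WEBHOOK_URL,
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(req, timeout=10) as resp:
            resp.read()
    return {"forwarded": len(payloads)}
```

Passing `keywords=()` forwards every log line, which leaves all severity filtering to automations in Prefect instead.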

The result: Prefect Cloud gets events from your CloudWatch logs. Those logs are now in the event feed in Prefect Cloud where you can see historical behavior as you debug any issues.

Moreover, any event, and therefore any CloudWatch log, is actionable. Build automations on top of the logs (events) to make sure all your AWS infrastructure is generating data, processing data, running, or doing whatever else you expect it to do.

And if it’s not, implement an ad-hoc backup plan: run a Python script, kick off a Terraform check, spin up new infra, you name it.

Piqued your curiosity?

Prefect Cloud elevates the open source Prefect orchestration library with events, automations, and security: everything you need to build up the information and intelligence of your infrastructure or data platform. Check it out for yourself.

Prefect makes complex workflows simpler, not harder. Try Prefect Cloud for free, download our open source package, join our Slack community, or talk to one of our engineers to learn more.