What is a Data Pipeline?

Data pipelines are an integral part of any data infrastructure, and the end goal of much data engineering work. Pipelines range from simple scheduled jobs that move data from a source to a destination, to production-grade pipelines triggered by events with hefty observability requirements, and everything in between.

Data pipeline definition

👉🏼 **da·ta pipe·line** /ˈdadə,ˈdādə ˈpīpˌlīn/ noun

A data pipeline is a workflow that moves data from a source to a destination, often transforming that data along the way.

A basic data pipeline includes the source and target information and any logic by which the data is transformed. Most data pipelines begin life in a local development environment, as a Python script or even a single SQL statement. Running something locally, or against a commonly used data store such as a data warehouse, is often straightforward and quick.
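
As a minimal sketch of those three parts (source, transformation logic, target) in one local Python script, assume a small in-memory CSV as the source and an in-memory SQLite database as the target. The sample rows, the `prices` table, and every function name here are hypothetical, chosen only for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical source data: in-memory CSV text standing in for a real file export.
SOURCE_CSV = """ticker,date,close
ABC,2024-01-02,100.0
ABC,2024-01-03,101.5
"""


def extract(text: str) -> list[dict]:
    """Source: read raw rows from CSV text (every value arrives as a string)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Logic: cast each row to the typed values the target table expects."""
    return [(r["ticker"], r["date"], float(r["close"])) for r in rows]


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Target: write rows into a SQLite table and report how many were written."""
    conn.execute("CREATE TABLE IF NOT EXISTS prices (ticker TEXT, date TEXT, close REAL)")
    conn.executemany("INSERT INTO prices VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


def run_pipeline(source_text: str, conn: sqlite3.Connection) -> int:
    """Chain the three stages: extract -> transform -> load."""
    return load(transform(extract(source_text)), conn)


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    print(run_pipeline(SOURCE_CSV, connection))  # → 2
```

Keeping each stage in its own function makes it easy to later hand the same steps to a scheduler or orchestrator without rewriting them.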

What happens when you want to take your locally developed pipeline and turn it into a resilient, production-grade data pipeline? How can you automate it, and how can you trust that it runs when you expect it to?

Production data engineering: it's never just one Python script

In most cases, data pipelines can be automated through the combination of scheduling or triggering, remote infrastructure, and observability. This gives you a way to control when the pipeline runs (triggering), a place for it to execute (remote infra), and the means to know that it actually ran (observability).

Components of data pipeline architecture

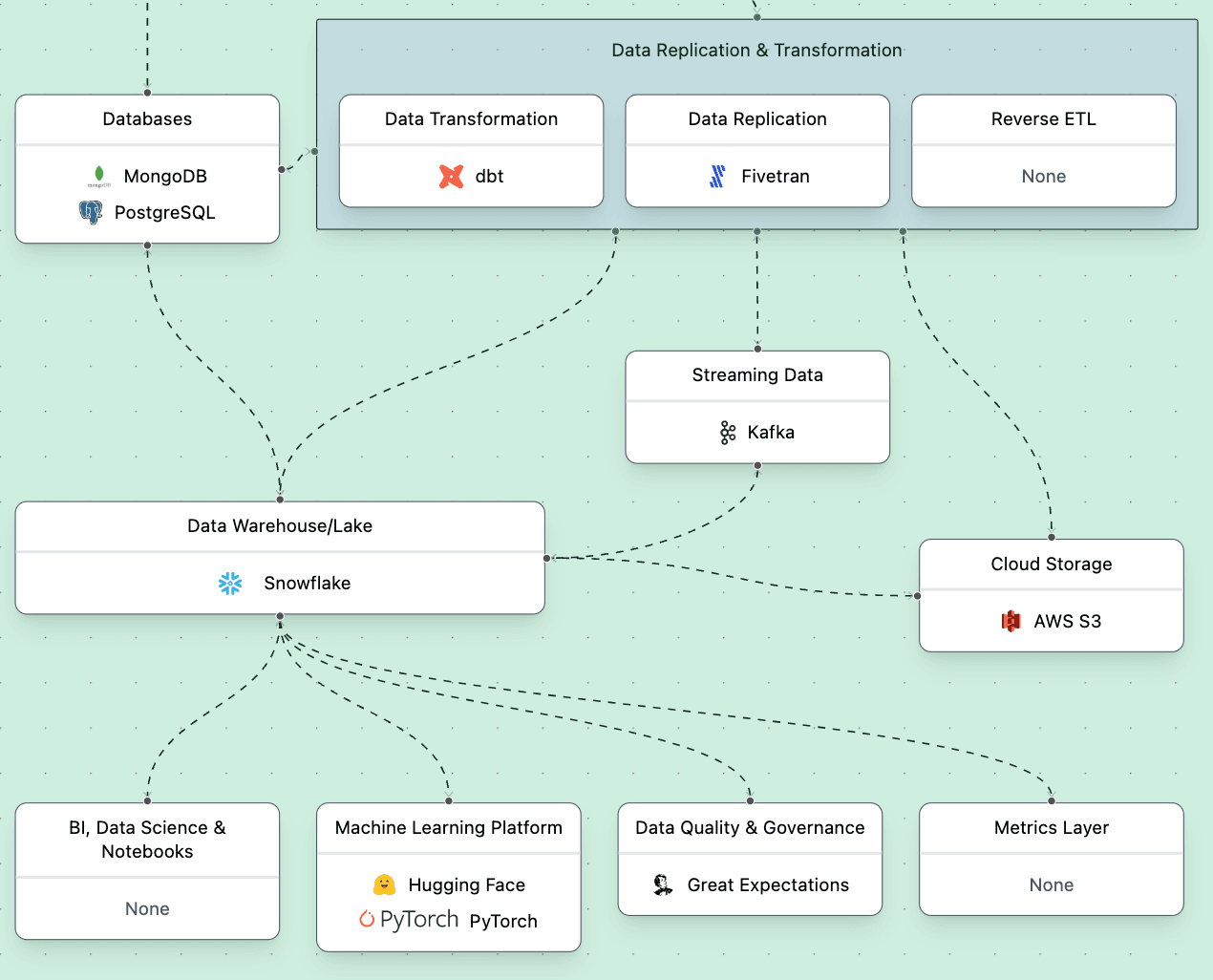

The core components of a data pipeline are integrations (the source and target data, and the means of moving data between them) and the business logic that transforms the data or trains models on it. These components can be extended or automated through scheduling, infrastructure, and observability.

Integrations

Integrations define how data is extracted from external sources and saved to a target system. This can involve APIs or storage systems such as warehouses, databases, and blob stores, and more complex projects often combine several of these integrations.
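
A sketch of combining two integration types, under stated assumptions: a JSON string stands in for an API response, CSV text stands in for a file pulled from a blob store, and an in-memory SQLite database plays the target. All names and sample records are hypothetical; a real pipeline would fetch these inputs over the network with the appropriate client library.

```python
import csv
import io
import json
import sqlite3

# Hypothetical inputs: a JSON body standing in for an API response, and CSV
# text standing in for a file read from a blob store.
API_BODY = '{"customers": [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}]}'
BLOB_CSV = "customer_id,plan\n1,pro\n2,free\n"


def names_from_api(body: str) -> dict[int, str]:
    """Map customer id -> name from the API payload."""
    return {c["id"]: c["name"] for c in json.loads(body)["customers"]}


def plans_from_blob(text: str) -> dict[int, str]:
    """Map customer id -> plan from the CSV file; ids arrive as strings."""
    return {int(r["customer_id"]): r["plan"] for r in csv.DictReader(io.StringIO(text))}


def save_customers(conn: sqlite3.Connection, names: dict[int, str], plans: dict[int, str]) -> int:
    """Combine both sources by id and save the result to a database table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers (id INTEGER PRIMARY KEY, name TEXT, plan TEXT)"
    )
    # Customers missing from the CSV get a NULL plan rather than being dropped.
    rows = [(cid, name, plans.get(cid)) for cid, name in sorted(names.items())]
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    save_customers(conn, names_from_api(API_BODY), plans_from_blob(BLOB_CSV))
    print(conn.execute("SELECT * FROM customers ORDER BY id").fetchall())
    # → [(1, 'Ada', 'pro'), (2, 'Grace', 'free')]
```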

```python
import yfinance as yf

# download data
data = yf.download(tickers='SNOW', period='3mo', interval='1d')
```

Transformation

The transformation/training logic handles transformations and any other data manipulation. Plan this stage carefully: each step should receive data in the shape it expects from the step before it, so that mistakes are caught early instead of propagating silently through the rest of the pipeline.
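
The example that follows computes a 50-day simple moving average (SMA) with pandas' `rolling(50).mean()`. To make that step concrete, here is a dependency-free sketch of the same trailing-window mean; the function name is hypothetical, and it returns `None` where pandas would return `NaN`.

```python
from collections import deque
from typing import Deque, Iterable, List, Optional


def simple_moving_average(values: Iterable[float], window: int) -> List[Optional[float]]:
    """Trailing mean of the last `window` values, or None until the window is full.

    For inputs without missing values this matches pandas' rolling(window).mean()
    with default settings, where the first window - 1 results are NaN.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    buf: Deque[float] = deque()
    total = 0.0
    out: List[Optional[float]] = []
    for v in values:
        buf.append(v)
        total += v
        if len(buf) > window:
            total -= buf.popleft()  # slide the window forward by one value
        out.append(total / window if len(buf) == window else None)
    return out


print(simple_moving_average([1, 2, 3, 4, 5], window=3))  # → [None, None, 2.0, 3.0, 4.0]
```

The same rule explains the pandas output: a three-month download holds only about 60 trading days, so a 50-day window is complete for just the last dozen or so rows.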

```python
import yfinance as yf

# download data
data = yf.download(tickers='SNOW', period='3mo', interval='1d')

# Calculate the 50-day SMA of the closing price using pandas.
# Rows without a full 50-day window come back as NaN, so drop them.
sma = data["Close"].rolling(window=50).mean().dropna()

# Print the 50-day SMA values to the console
print("50-day SMA: ", sma)
```

Triggering/scheduling

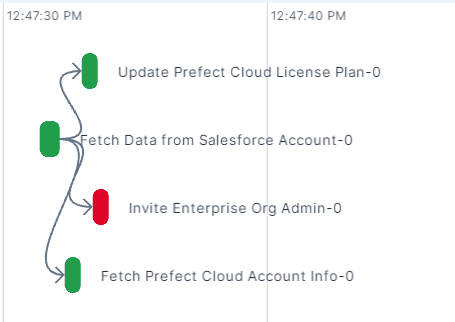

Triggering a data pipeline can be as simple as a locally hosted cron schedule. Cron is a basic service that does one thing well: scheduling. For production data pipelines, orchestration tools such as Prefect, Airflow, or Dagster can make your pipeline more resilient by handling complex scheduling, retries, and other failure logic.
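
Retries are one piece of that failure logic. The decorator below is a hand-rolled sketch of retry with exponential backoff, not the API of Prefect, Airflow, or Dagster, which typically let you configure retries on a task instead of writing this yourself. The name `retry` and its parameters are assumptions for illustration.

```python
import functools
import time


def retry(max_attempts: int = 3, base_delay: float = 1.0, exceptions: tuple = (Exception,)):
    """Retry the wrapped function on failure with exponential backoff.

    Waits base_delay, then 2 * base_delay, then 4 * base_delay, ... between
    attempts, and re-raises the last error once max_attempts is exhausted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the original error
                    time.sleep(base_delay * 2 ** (attempt - 1))

        return wrapper

    return decorator
```

Decorating a flaky extract step with `@retry(max_attempts=4, base_delay=0.5)` would wait 0.5 s, 1 s, and then 2 s between its four attempts. For the plain cron route, a crontab entry such as `0 6 * * * python /path/to/pipeline.py` runs a script every day at 06:00 (the path is a placeholder).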

While scheduling is a basic expectation of most workloads (think batch ETL), real-time, streaming, and event-driven pipelines are growing in complexity. The real world is messy, and data engineers are increasingly forced to react to systems outside their control, where a schedule just won’t cut it.
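
To contrast event-driven triggering with a clock, here is a minimal sketch in which work starts when an event arrives. An in-process `queue.Queue` stands in for whatever actually delivers events in production (for example a message broker or a storage notification); the `STOP` sentinel, the function name, and the sample file paths are hypothetical.

```python
import queue
from typing import Any, Callable

STOP = object()  # sentinel that tells the listener to shut down


def run_on_events(events: queue.Queue, handle: Callable[[Any], None]) -> int:
    """Run `handle` once per event, as each event arrives.

    events.get() blocks until something is published, so work starts when
    data shows up rather than at a fixed time of day.
    """
    processed = 0
    while True:
        event = events.get()
        if event is STOP:
            return processed
        handle(event)
        processed += 1


if __name__ == "__main__":
    inbox: queue.Queue = queue.Queue()
    # Hypothetical events: notifications that new files landed in storage.
    for path in ["landing/orders_001.csv", "landing/orders_002.csv"]:
        inbox.put({"type": "file_arrived", "path": path})
    inbox.put(STOP)
    count = run_on_events(inbox, lambda event: print("processing", event["path"]))
    print(count, "events handled")
```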

Infrastructure

Infrastructure determines where the pipeline actually runs: it could be a hosted cloud service or a custom installation, depending on how complex the project is. Scalability matters here, because the infrastructure must support both current workloads and future needs as your organization grows. Infrastructure is a highly varied and nuanced topic that depends heavily on your requirements and the tools you have access to. Examples of infrastructure include:

- A data warehouse like Snowflake, BigQuery, or Redshift

- A database like AWS RDS, Oracle, PostgreSQL, Db2, MongoDB, etc.

- A serverless service like Google Cloud Run, AWS Elastic Container Service (ECS) on Fargate, Azure Container Instances, etc.

- Kubernetes, self-hosted or managed via a service such as AWS EKS

While beginners to data engineering often start with basic data movement and manipulation in a data warehouse, they invariably end up with more complex data pipelines with a variety of infrastructure requirements.
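
One way to keep the same pipeline code portable across infrastructure like the options above is to read its run settings from the environment rather than hard-coding them. This is a sketch under assumptions: the variable names `PIPELINE_TARGET_URL` and `PIPELINE_BATCH_SIZE` and the local defaults are hypothetical, chosen only for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class RunConfig:
    target_url: str  # where results are written
    batch_size: int  # rows per write


def load_config(env: Optional[Mapping[str, str]] = None) -> RunConfig:
    """Build run settings from environment variables, falling back to local defaults.

    The variable names are hypothetical; use whatever your platform injects.
    """
    env = os.environ if env is None else env
    return RunConfig(
        target_url=env.get("PIPELINE_TARGET_URL", "sqlite:///local.db"),
        batch_size=int(env.get("PIPELINE_BATCH_SIZE", "500")),
    )


if __name__ == "__main__":
    print(load_config())
```

Locally the defaults apply; on a container service or Kubernetes, the same code picks up whatever values the deployment sets.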

Observability

Some of these orchestration tools also offer observability features that let you inspect the individual steps within your pipeline. For example, if a single task within your pipeline fails, it may not be clear how to resolve the failure without granular observability. If several of your pipelines fail, observability features also make you aware of the failures immediately and help you identify what they have in common.
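
A minimal sketch of per-task observability: each step's status, duration, and error are recorded and logged, so a failure points at a specific task. This uses Python's standard `logging` module, not any orchestrator's API; `TaskRecord`, `run_tasks`, and the stop-on-first-failure policy are assumptions.

```python
import logging
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("pipeline")


@dataclass
class TaskRecord:
    name: str
    status: str      # "completed" or "failed"
    seconds: float   # wall-clock duration of the task
    error: str = ""  # repr of the exception, if any


def run_tasks(tasks: Iterable[Tuple[str, Callable[[], None]]]) -> List[TaskRecord]:
    """Run (name, callable) pairs in order and record each task's outcome.

    Stops at the first failure so the failing step is easy to pinpoint.
    """
    records: List[TaskRecord] = []
    for name, fn in tasks:
        start = time.perf_counter()
        try:
            fn()
        except Exception as exc:
            records.append(TaskRecord(name, "failed", time.perf_counter() - start, repr(exc)))
            log.error("task %s failed: %r", name, exc)
            break
        records.append(TaskRecord(name, "completed", time.perf_counter() - start))
        log.info("task %s completed", name)
    return records
```

Shipping these records to a shared store is what lets you spot commonalities when several pipelines fail at once.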

When constructing a data pipeline, consider each component carefully: connect sources to targets correctly, choose a suitable platform to run your code, and make sure your transformation logic behaves consistently across environments where that is required. Together, these elements form a dependable data pipeline architecture that supports your company’s data strategy over the long term.

A note on dependencies

All pipelines have dependencies, both internal and external, that they need in order to function properly. Consider them carefully when constructing a data pipeline; read more about them in our Head of Product’s blog post about workflow dependencies.
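
Internal dependencies between tasks form a directed graph, and a valid run order is a topological sort of that graph. Here is a sketch using the standard library's `graphlib` (Python 3.9+) with a hypothetical four-task pipeline; the task names and the `execution_order` helper are illustrative only, and external dependencies such as upstream APIs are not modeled.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it must wait for.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders_customers": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join_orders_customers"},
}


def execution_order(deps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        # exc.args[1] lists the tasks that form the cycle
        raise ValueError(f"circular dependency: {exc.args[1]}") from exc


if __name__ == "__main__":
    print(execution_order(DEPENDENCIES))
```

The two extract tasks have no ordering between them, which is exactly the information an orchestrator uses to run them in parallel.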

Building effective and automated data pipelines by situation

Data pipelines are changing the way businesses operate, allowing them to automate manual processes, gain visibility into their operations, and scale their infrastructure more easily. By automating this work, companies can improve efficiency and reduce costs while keeping their systems running smoothly.

The tricky part of building an effective data pipeline is making your workflow repeatable and resilient. If your pipeline is simple, with straightforward infrastructure requirements and known dependencies, it may be easy to build. If it involves complex infrastructure, complex dependencies, and several systems stitched together, it becomes harder to build and may require more advanced infrastructure and orchestration capabilities.
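
One common way to make a pipeline repeatable is to make its load step idempotent, so rerunning it after a failure does not duplicate data. Here is a sketch using SQLite's upsert syntax (`INSERT ... ON CONFLICT ... DO UPDATE`, available since SQLite 3.24); the `prices` table, its `(ticker, date)` key, and the sample rows are hypothetical.

```python
import sqlite3
from typing import Iterable, Tuple


def upsert_prices(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str, float]]) -> None:
    """Load rows keyed on (ticker, date); rerunning with the same rows changes nothing."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS prices ("
        "ticker TEXT NOT NULL, date TEXT NOT NULL, close REAL, "
        "PRIMARY KEY (ticker, date))"
    )
    # A conflicting key updates the existing row instead of inserting a duplicate.
    conn.executemany(
        "INSERT INTO prices (ticker, date, close) VALUES (?, ?, ?) "
        "ON CONFLICT (ticker, date) DO UPDATE SET close = excluded.close",
        rows,
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    batch = [("ABC", "2024-01-02", 100.0), ("ABC", "2024-01-03", 101.5)]
    upsert_prices(conn, batch)
    upsert_prices(conn, batch)  # simulated rerun after a failure
    print(conn.execute("SELECT COUNT(*) FROM prices").fetchone()[0])  # → 2
```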

By taking the time to understand how data pipelines work and what they can do for a business, companies can make the most of this technology, unlocking new levels of productivity and success in the process.

Prefect makes complex workflows simpler, not harder. Try Prefect Cloud for free for yourself, download our open source package, join our Slack community, or talk to one of our engineers to learn more.