Airflow to Prefect: Your Migration Playbook

In Part 1 of this series, we explored why teams are moving from Apache Airflow to Prefect – from the pain of complex Airflow setups to the appeal of Prefect’s flexible, Pythonic workflows. Now, in this blog, we’ll focus on how to execute a smooth migration.

This guide will walk you through planning an incremental transition, provide technical tips (with code examples) to rebuild your workflows in Prefect, and share real-world success stories of companies that made the switch. By the end, you’ll have a clear roadmap for migrating to Prefect and know exactly where to go for next steps.

1. Plan Your Migration

Audit and Prioritize

Begin by cataloging your existing Airflow DAGs. Identify which pipelines:

- Have high business impact or cause operational pain.

- Are updated frequently (and therefore might benefit most from modern tooling).

- Could be easily migrated as a proof-of-concept.

This audit helps you decide which workflows to migrate first. Start small—migrate simpler pipelines and gradually tackle more complex ones.
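One way to make the audit concrete is to score each DAG and sort the list. The sketch below is only an illustration: the `DagRecord` fields, the weights, and the sample DAG names are all hypothetical, and you would fill them in from your own inventory.

```python
from dataclasses import dataclass


@dataclass
class DagRecord:
    """One row of a hypothetical DAG inventory."""
    dag_id: str
    business_impact: int   # 1 (low) to 5 (high), scored by the team
    changes_last_90d: int  # commits touching the DAG file
    task_count: int        # rough proxy for migration effort


def migration_order(dags: list[DagRecord]) -> list[DagRecord]:
    """Sort DAGs so cheap, high-value candidates come first."""
    def score(d: DagRecord) -> float:
        # Value grows with impact and churn (churn is capped at 10 changes).
        value = d.business_impact + min(d.changes_last_90d, 10) / 2
        # Divide by size so small pipelines surface as proof-of-concept picks.
        return value / max(d.task_count, 1)
    return sorted(dags, key=score, reverse=True)


inventory = [
    DagRecord("nightly_billing", business_impact=5, changes_last_90d=2, task_count=40),
    DagRecord("marketing_export", business_impact=3, changes_last_90d=8, task_count=4),
    DagRecord("legacy_cleanup", business_impact=1, changes_last_90d=0, task_count=3),
]

for dag in migration_order(inventory):
    print(dag.dag_id)
```

With these made-up numbers, the small but frequently edited `marketing_export` lands first and the large `nightly_billing` DAG last, which matches the "start small" advice above.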

Set Up Your Prefect Environment

Before rewriting your workflows, get Prefect up and running alongside Airflow. This may involve:

- Installing Prefect and launching a self-hosted Prefect server, or signing up for Prefect Cloud.

- Configuring your execution environment (local machine, Docker, or Kubernetes).

Having Prefect operating in parallel lets you experiment with new flows without disrupting your existing operations.

Prepare Your Team

Ensure that your team is ready for the transition:

- Conduct a brief training session on Prefect basics.

- Run a pilot migration on a non-critical DAG to build confidence and surface questions.

Having a plan with these elements will set the foundation for a smoother transition. Now let’s talk about executing that transition incrementally.

2. Incremental Adoption: Running Airflow and Prefect Side-by-Side

A common mistake is thinking you must switch everything from Airflow to Prefect overnight. In reality, an incremental approach works best. During the transition period, you can run Airflow and Prefect side-by-side – gradually moving workflows to Prefect without downtime or disruption. Here’s how to manage this hybrid period effectively:

- One Pipeline at a Time: Migrate and validate each workflow individually. For a given DAG in Airflow, deploy its equivalent as a Prefect flow. For a brief overlap, you might have the same pipeline in both systems – this is okay (and even useful for testing), as long as you don’t run them simultaneously on production data.

- Avoid Dual Maintenance: Plan to deprecate the Airflow DAG as soon as its Prefect flow replacement is stable. Maintaining business logic in two places for too long can double your work and cause confusion.

- Consistent Schedules and Alerts: During the migration, make sure your scheduling and monitoring do not become fragmented. You might continue using Airflow’s alerting for DAGs still on Airflow, and Prefect’s notifications for flows already migrated. Alternatively, you can start by observing Airflow from Prefect, which gives you unified task management from day one.

- Keep Stakeholders Informed: Tell stakeholders which pipelines are moving to Prefect and when. Clear communication prevents confusion if logs or notifications change during the migration.

- Short Transition, Long-Term Gain: The goal is to minimize the time you’re running two orchestrators. In practice, teams often complete the migration over a few weeks to a couple of months, depending on the number of workflows. During this transition, new pipelines run on Prefect while legacy ones continue on Airflow, which gives you a measured handover rather than two disconnected systems maintained indefinitely.

This incremental approach minimizes risk and allows teams to learn Prefect on simpler pipelines first. Next, let's look at how to rebuild your Airflow DAGs in Prefect.
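During the brief overlap described above, it helps to check that the Prefect flow produces the same result as the Airflow DAG on the same test input. Here is a minimal sketch of such a parity check using only the standard library. The function names and sample rows are hypothetical, and it assumes both pipelines can export their output as a list of JSON-serializable dictionaries.

```python
import hashlib
import json


def fingerprint(rows: list[dict]) -> str:
    """Order-independent hash of a result set."""
    # Serialize each row with sorted keys, then sort the rows themselves,
    # so neither key order nor row order affects the hash.
    canonical = sorted(json.dumps(row, sort_keys=True) for row in rows)
    return hashlib.sha256("\n".join(canonical).encode()).hexdigest()


def outputs_match(airflow_rows: list[dict], prefect_rows: list[dict]) -> bool:
    """True when both runs produced the same rows, ignoring ordering."""
    return (
        len(airflow_rows) == len(prefect_rows)
        and fingerprint(airflow_rows) == fingerprint(prefect_rows)
    )


airflow_out = [{"id": 1, "total": 10}, {"id": 2, "total": 20}]
prefect_out = [{"total": 20, "id": 2}, {"id": 1, "total": 10}]
print(outputs_match(airflow_out, prefect_out))  # True
```

Once a pipeline passes a check like this on a few representative inputs, you have a concrete signal that the Airflow DAG can be retired.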

3. Rebuilding Your Workflows in Prefect

Migrating to Prefect will often mean refactoring your pipeline code. The good news is that Prefect’s API is very ergonomic – many Airflow users find they can express the same logic with less code and more flexibility. Let’s break down the translation of concepts and walk through an example.

Note: Recent Airflow versions narrow some of this gap. Airflow 2.0+ offers the TaskFlow API (@task decorators for in-line Python workflow definitions) and 2.3+ adds dynamic task mapping, similar to Prefect's syntax, though branching logic still requires extra decorators. Your Airflow version will determine which features are available.

DAGs ➡️ Flows: Replace Airflow DAGs with Python functions decorated with @flow. Each flow execution is a "flow run". Because flows are just Python functions, creating a flow run is as straightforward as calling the function, which makes flows easy to test and trigger programmatically.

Tasks vs. Operators: Airflow typically requires using specific operator classes (e.g., PythonOperator, BashOperator) and tying them together. Prefect replaces that with Python functions decorated by @task. No boilerplate or operator classes needed - any Python code can be a task.

    import httpx
    from prefect import flow, task


    @task
    def get_url(url: str):
        response = httpx.get(url)
        return response.json()


    @flow(retries=3, log_prints=True)
    def get_repo_info(repo_name: str = "PrefectHQ/prefect"):
        url = f"https://api.github.com/repos/{repo_name}"
        repo_stats = get_url(url)
        print(f"{repo_name} repository statistics 🤓:")
        print(f"Stars 🌠 : {repo_stats['stargazers_count']}")

Managing Context: There’s no need to implement Airflow’s BaseOperator or manage context. Any Python code can become a task by adding the decorator, which makes your code much cleaner and easier to maintain. You can, for instance, call external APIs, query databases, or run Spark jobs within a Prefect task using standard Python libraries or Prefect’s collection of task integrations.

Data Passing (XComs no more!): One of the biggest developer experience improvements is how data is passed between tasks. In Airflow, if Task A needs to share data with Task B, you’d typically push an XCom and pull it in the downstream task. In Prefect, tasks return values directly to downstream tasks. For example:

    processed_df = transform_data(extract_data())

Prefect will ensure extract_data runs first and transform_data receives its output. No XCom setup or metaclasses needed!

Scheduling and Retries: In Airflow, schedule intervals are defined in the DAG, and retries are properties of tasks or DAG settings. In Prefect, scheduling is decoupled from flow code: you first deploy a flow and then schedule the deployment. Rather than being co-mingled with the data engineering logic, scheduling is a separate concern that can be managed together with the code or independently of it.

You can attach a schedule when you create the deployment in code, or add and change it after deployment through the Prefect UI or CLI.

Example: Converting an Airflow DAG to a Prefect Flow

Let’s illustrate the migration with a simple example. Suppose you have an Airflow DAG that extracts data, transforms it, and then loads it (ETL). In Airflow, you might have something like:

Airflow DAG (sketch):

    from airflow import DAG
    from airflow.operators.python import PythonOperator

    # extract_fn, transform_fn and load_fn are plain Python callables defined elsewhere
    with DAG('etl_pipeline', schedule='@daily', ...) as dag:
        extract = PythonOperator(task_id='extract_data', python_callable=extract_fn)
        transform = PythonOperator(task_id='transform_data', python_callable=transform_fn)
        load = PythonOperator(task_id='load_data', python_callable=load_fn)

        extract >> transform >> load  # define dependencies

This defines three tasks and their order. To test any part, you’d likely have to trigger the DAG or run tasks in an Airflow context.

Prefect Flow (equivalent)

    from prefect import flow, task


    @task
    def extract_data():
        # ... (your extraction logic here)
        return [1, 2, 3]


    @task
    def transform_data(data):
        # ... (transform logic here)
        return [x * 10 for x in data]


    @task
    def load_data(data):
        # ... (load logic here, e.g., writing to DB)
        print(f"Loaded {len(data)} records")


    @flow
    def etl_pipeline():
        raw = extract_data()  # call extract task
        processed = transform_data(raw)  # call transform task with output
        load_data(processed)  # call load task with output


    # Trigger the flow (e.g., for testing or ad-hoc run)
    if __name__ == "__main__":
        etl_pipeline()

What's happening here? The flow defines the execution order by passing task outputs as inputs. Prefect handles the sequence automatically. You can run the flow directly with etl_pipeline(), or test individual tasks (e.g., transform_data.fn([1,2,3])) for easy debugging and unit testing.

A few things to note in this Prefect example:

- We didn’t specify any schedule in code. We could deploy this flow and then configure a daily schedule in Prefect Cloud, via a declarative prefect.yaml, or via the prefect deployment build CLI (replaced by prefect deploy in newer releases) with a cron expression. This separation of concerns makes the code more reusable. For example, you could run it ad-hoc, in development, or on a different schedule without code changes.

- Because tasks return data normally, we could manipulate raw or processed in the flow function just like any Python variables (e.g., do an extra check, transformation, or logging).

- If one of these tasks fails, Prefect will retry it (if configured), and you can observe the state of each task in the UI or in logs. Prefect’s error handling is robust: for instance, you can set up notifications or examine rich error messages in Prefect’s interface, which is part of why companies like Endpoint chose Prefect for its fault tolerance and observability.

With this example in mind, you can start converting your Airflow DAGs to Prefect flows one by one. Focus on writing clean, testable task functions - without worrying about the DAG structure.

Next, we’ll address how to handle the execution environment and scheduling in Prefect, which corresponds to Airflow’s concept of executors and workers.

4. Adapting Your Infrastructure: From Airflow Executors to Prefect Work Pools

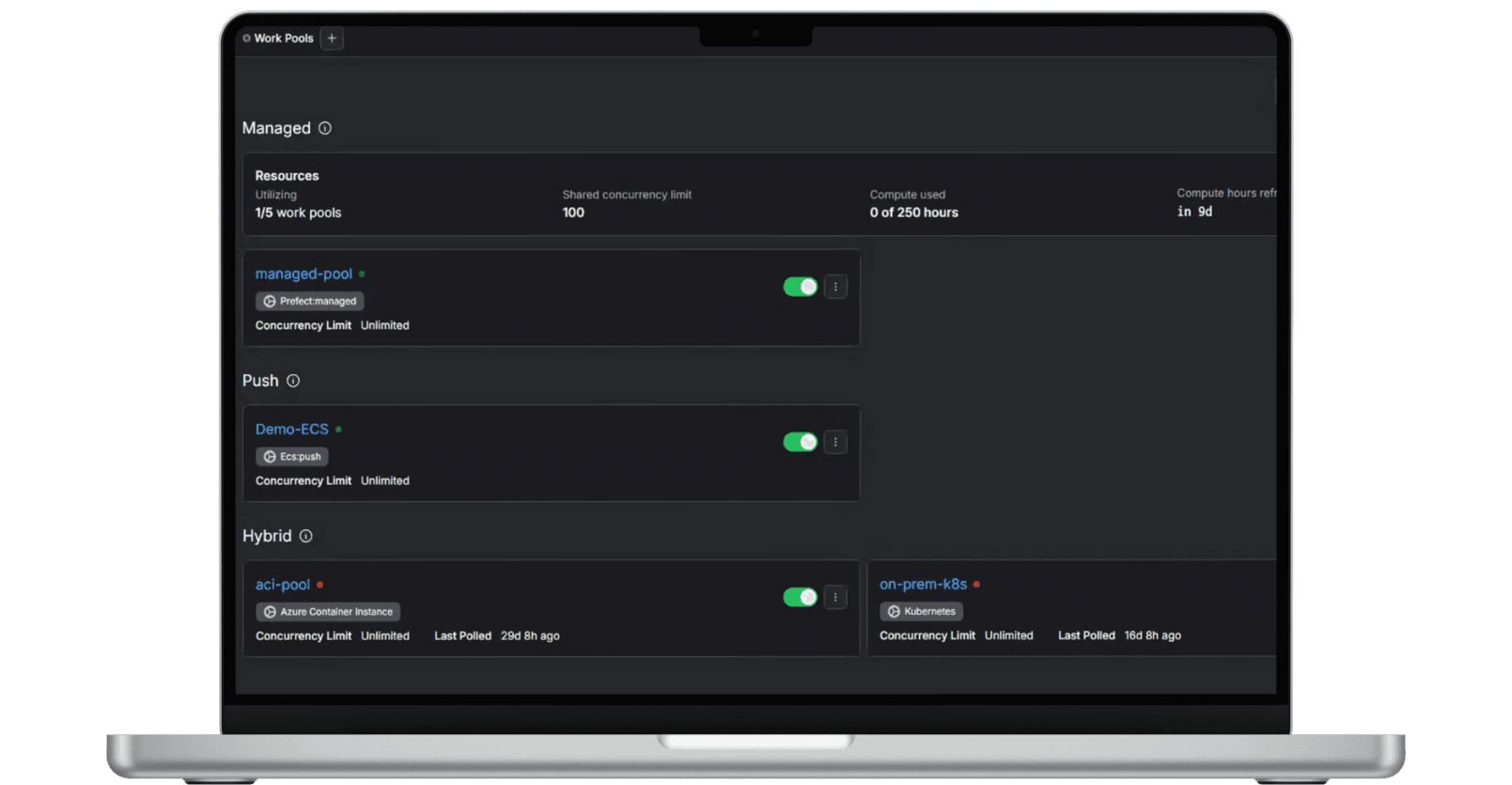

One concern when migrating is infrastructure compatibility. Airflow uses executors to determine how tasks are executed (e.g. LocalExecutor, CeleryExecutor, KubernetesExecutor, etc.). Prefect instead uses work pools, workers, and task runners to parallelize and scale tasks across infrastructure.

Note: In Prefect, individual flows can run on separate work pools without requiring an additional Prefect instance. You can assign different work pools to different flows, making it easy to scale without additional operational overhead.

Here's how Airflow setups map to Prefect:

- Airflow LocalExecutor → Prefect Local Work Pool or Subprocess: If you want to run tasks locally, Prefect’s default settings can accomplish this. To achieve local parallelism, use Prefect's task runners: ConcurrentTaskRunner for threading or DaskTaskRunner (from the prefect-dask integration) for local clusters. For example: @flow(task_runner=DaskTaskRunner()).

Work pools can also run flows locally using the default process type, replacing Airflow's scheduler+worker functionality directly.

- Airflow CeleryExecutor → Prefect Workers on Multiple Nodes: If you used CeleryExecutor, you likely had multiple worker servers to distribute tasks. You can deploy Prefect workers across machines with "process" or "docker" work pools. Workers execute flow runs without requiring message brokers or result backends. Prefect's work pools provide granular control over job scheduling, similar to Celery queues. What’s more, Prefect’s deeper integration with tools like Dask means “you can forget about Celery” for most parallelism needs.

- Airflow BashOperator or SSHOperator → Prefect Remote Execution: Some Airflow setups run tasks on remote machines via SSH or by triggering jobs on other systems. Prefect’s approach here would be to use the appropriate integration, or you could use Prefect’s ability to call any Python code (which could include Paramiko or other SSH library calls). Prefect tasks can also leverage APIs/SDKs for remote systems. In short, anything you did in a custom Airflow operator, you can likely do with a Prefect task (often with fewer lines of code). If you need to orchestrate outside services, Prefect’s extensibility has you covered.

- Airflow KubernetesExecutor → Prefect Kubernetes Work Pool: Airflow’s K8s executor launches each task in its own Kubernetes pod, which is great for isolation but can be complex to configure. Prefect offers a Kubernetes work pool that provides similar capability: each flow run can be launched as a Kubernetes job/pod with a designated CPU/memory definition. This achieves K8s scalability without Airflow's infrastructure overhead.

Here is an example of using this executor in Airflow from the Airflow documentation:

    try:
        from kubernetes.client import models as k8s
    except ImportError:
        log.warning(
            "The example_kubernetes_executor example DAG requires the kubernetes provider."
            " Please install it with: pip install apache-airflow[cncf.kubernetes]"
        )
        k8s = None

    if k8s:
        with DAG(
            dag_id="example_kubernetes_executor",
            schedule=None,
            start_date=pendulum.datetime(2021, 1, 1, tz="UTC"),
            catchup=False,
            tags=["example3"],
        ) as dag:
            # You can use annotations on your kubernetes pods!
            start_task_executor_config = {
                "pod_override": k8s.V1Pod(metadata=k8s.V1ObjectMeta(annotations={"test": "annotation"}))
            }

            @task(executor_config=start_task_executor_config)
            def start_task():
                print_stuff()

As seen in this Airflow Kubernetes Example, developers must understand both the Kubernetes executor and provider to orchestrate workloads within pods. This tight coupling of code and deployment details adds complexity and increases maintenance overhead.

With Prefect, the separation of code and deployment ensures that your code remains DRY, free from Kubernetes-specific configurations. Instead of embedding deployment logic in your business code, you define work pools that dynamically execute tasks based on deployment configurations—not the code itself. This clean separation allows platform teams and data engineers to work with a streamlined, well-defined interface.

Prefect's work pools support all common executor patterns and can be managed via the UI, CLI, or API, eliminating the need for static config files. This separation of concerns accelerates development while enabling teams to optimize execution strategies without modifying application logic.

Real-World Results After Migrating

Many teams have transitioned from Airflow to Prefect and seen significant improvements. Here are a few brief success stories that highlight what’s possible:

- Endpoint: After migrating, tripled workflow throughput and reduced costs by 73%. Their team reported significantly better error handling and issue resolution.

- Cash App: Moved their ML platform from Airflow to Prefect for better Python integration. This enabled faster iteration on model training workflows and more flexible deployment of fraud detection models.

- LiveEO: Processing petabytes of satellite data, achieved 65% reduction in pipeline runtimes and costs through Prefect's optimized parallel processing.

These examples show that migrating to Prefect can transform workflow orchestration across various use cases, from ETL to machine learning pipelines, often with immediate positive results.

Next Steps: Ready to Migrate?

Migrating from Airflow to Prefect might feel daunting, but with the right approach, it can be incredibly rewarding. By now, you should have a clearer picture of how to go about it and why it’s worth the effort.

If you’re ready to take the next step, check out our webinar, Observing Airflow DAGs in Prefect, to learn how starting with observability can ease your migration.